Showing posts tagged Eideticker

Quick update on my last post about finding some kind of camera suitable for use in automated performance testing of fennec and b2g with eideticker. Shortly after I wrote that, I found out about a company called Point Grey Research which manufactures custom web cameras intended for exactly the sorts of things we’re doing. Initial results with the Flea3 camera I ordered from them are quite promising:

(the actual capture is even higher quality than that would suggest some detail is lost in the conversion to webm)

There seems to be some sort of issue with the white balance in that capture which is causing a flashing effect (I suspect this just involves flipping some kind of software setting: still trying to grok their SDK), and we’ll need to create some sort of enclosure for the setup so ambient light doesn’t interfere with the capture but overall I’m pretty optimistic about this baby. 60 frames per second, very high resolution (1280x960), no issues with HDMI (since it’s just a USB camera), relatively inexpensive.

[ For more information on the Eideticker software I’m referring to, see this entry ]

Ok, so as I mentioned last time I’ve been looking into making Eideticker work for devices without native HDMI output by capturing their output with some kind of camera. So far I’ve tried four different DSLRs for this task, which have all been inadequate for different reasons. I was originally just going to write an email about this to a few concerned parties, but then figured I may as well structure it into a blog post. Maybe someone will find it useful (or better yet, have some ideas.)

Elmo MO–1

This is the device I mentioned last time. Easy to set up, plays nicely with the Decklink capture card we’re using for Eideticker. It seemed almost perfect, except for that:

- The image quality is really bad (beaten even by $200 standard digital camera). Tons of noise, picture quality really bad. Not *necessarily* a deal breaker, but it still sucks.

- More importantly, there seems to be no way of turning off the auto white balance adjustment. This makes automated image analysis impossible if the picture changes, as is highlighted in this video:

Canon Rebel T4i

This is the first camera that was recommended to me at the camera shop I’ve been going to. It does have an HDMI output signal, but it’s not “clean”. This blog post explains the details better than I could. Next.

Nikon D600

Supposedly does native clean 720p output, but unfortunately the output is in a “box” so isn’t recognized by the Decklink cards that we’re using (which insist on a full 1280×720 HDMI signal to work). Next.

Nikon D800

It is possible to configure this one to not put a box around the output, so the Decklink card does recognize it. Except that the camera shuts off the HDMI signal whenever the input parameters change on the card or the signal input is turned on, which essentially makes it useless for Eideticker (this happens every time we start the Eideticker harness). Quite a shame, as the HDMI signal is quite nice otherwise.

—

To be clear, with the exception of the Elmo all the devices above seem like fine cameras, and should more than do for manual captures of B2G or Android phones (which is something we probably want to do anyway). But for Eideticker, we need something that works in automation, and none of the above fit the bill. I guess I could explore using a “real” video camera as opposed to a DSLR acting like one, though I suspect I might run into some of the same sorts of issues depending on how the HDMI output of those devices behaves.

Part of me wonders whether a custom solution wouldn’t work better. How complicated could it be to construct your own digital camera anyway? 😉 Hook up a fancy camera sensor to a pandaboard, get it to output through the HDMI port, and then we’re set? Or better yet, maybe just get a fancy webcam like the Playstation Eye and hook it up directly to a computer? That would eliminate the need for our expensive video capture card setup altogether.

[ For more information on the Eideticker software I’m referring to, see this entry ]

Here’s a long overdue update on where we’re at with Eideticker for FirefoxOS. While we’ve had a good amount of success getting useful, actionable data out of Eideticker for Android, so far we haven’t been able to replicate that success for FirefoxOS. This is not for lack of trying: first, Malini Das and then me have been working at it since summer 2012.

When it comes right down to it, instrumenting Eideticker for B2G is just a whole lot more complex. On Android, we could take the operating system (including support for all the things we needed, like HDMI capture) as a given. The only tricky part was instrumenting the capture so the right things happened at the right moment. With FirefoxOS, we need to run these tests on entire builds of a whole operating system which was constantly changing. Not nearly as simple. That said, I’m starting to see light at the end of the tunnel.

Platforms

We initially selected the pandaboard as the main device to use for eideticker testing, for two reasons. First, it’s the same hardware platform we’re targeting for other b2g testing in tbpl (mochitest, reftest, etc.), and is the platform we’re using for running Gaia UI tests. Second, unlike every other device that we’re prototyping FirefoxOS on (to my knowledge), it has HDMI-out capability, so we can directly interface it with the Eideticker video capture setup.

However, the panda also has some serious shortcomings. First, it’s obviously not a platform we’re shipping, so the performance we’re seeing from it is subject to different factors that we might not see with a phone actually shipped to users. For the same reason, we’ve had many problems getting B2G running reliably on it, as it’s not something most developers have been hacking on a day to day basis. Thanks to the heroic efforts of Thomas Zimmerman, we’ve mostly got things working ok now, but it was a fairly long road to get here (several months last fall).

More recently, we became aware of something called an Elmo which might let us combine

the best of both worlds. An elmo is really just a tiny mounted video camera with a bunch of outputs, and is I understand most commonly used to project documents in a classroom/presentation setting. However, it seems to do a great job of capturing mobile phones in action as well.

The nice thing about using an external camera for the video capture part of eideticker is that we are no longer limited to devices with HDMI out — we can run the standard set of automated tests on ANYTHING. We’ve already used this to some success in getting some videos of FirefoxOS startup times versus Android on the Unagi (a development phone that we’re using internally) for manual analysis. Automating this process may be trickier because of the fact that the video capture is no longer “perfect”, but we may be able to work around that (more discussion about this later).

FirefoxOS web page tests

These are the same tests we run on Android. They should give us an idea of roughly where our performance when browsing / panning web sites like CNN. So far, I’ve only run these tests on the Pandaboard and they are INCREDIBLY slow (like 1–3 frames per second when scrolling). So much so that I have to think there is something broken about our hardware acceleration on this platform.

FirefoxOS application tests

These are some new tests written in a framework that allows you to script arbitrary interactions in FirefoxOS, like launching applications or opening the task switcher.

I’m pretty happy with this. It seems to work well. The only problems I’m seeing with this is with the platform we’re running these tests on. With the pandaboard, applications look weird (since the screen resolution doesn’t remotely resemble the 320×480 resolution on our current devices) and performance is abysmal. Take, for example, this capture of application switching performance, which operates only at roughly 3–4 fps:

So what now?

I’m not 100% sure yet (partly it will depend on what others say as well as my own investigation), but I have a feeling that capturing video of real devices running FirefoxOS using the Elmo is the way forward. First, the hardware and driver situation will be much more representative of what we’ll actually be shipping to users. Second, we can flash new builds of FirefoxOS onto them automatically, unlike the pandaboards where you currently either need to manually flash and reset (a time consuming and error prone process) or set up an instance of mozpool (which I understand is quite complicated).

The main use case I see with eideticker-on-panda would be where we wanted to run a suite of tests on checkin (in tbpl-like fashion) and we’d need to scale to many devices. While cool, this sounds like an expensive project (both in terms of time and hardware) and I think we’d do better with getting something slightly smaller-scale running first.

So, the real question is whether or not the capture produced by the Elmo is amenable to the same analysis that we do on the raw HDMI output. At the very least, some of eideticker’s image analysis code will have to be adapted to handle a much “noisier” capture. As opposed to capturing the raw HDMI signal, we now have to deal with the real world and its irritating fluctuations in ambient light levels and all that the rest. I have no doubt it is *possible* to compensate for this (after all this is what the human eye/brain does all the time), but the question is how much work it will be. Can’t speak for anyone else at Mozilla, but I’m not sure if I really have the time to start a Ph.D-level research project in computational vision. 😉 I’m optimistic that won’t be necessary, but we’ll just have to wait and see.

[ For more information on the Eideticker software I’m referring to, see this entry ]

Just wanted to give some updates on a few new Eideticker features which have landed in the past week.

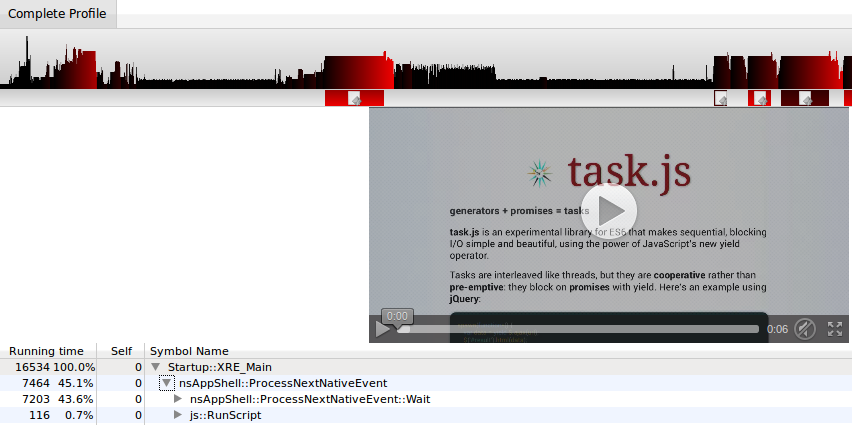

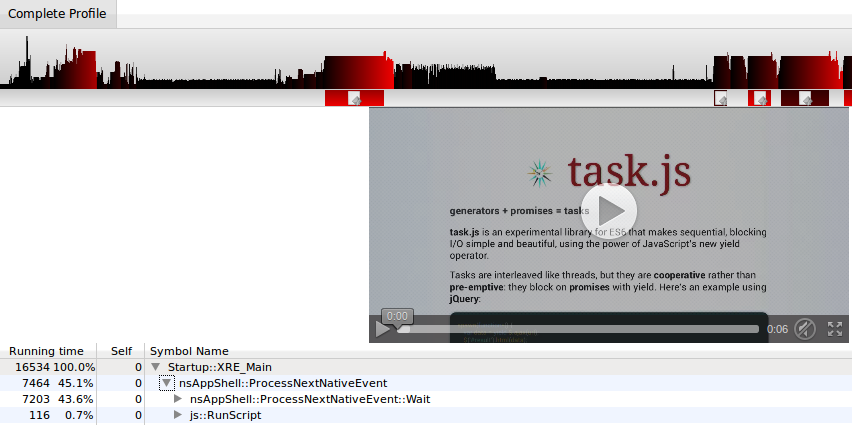

Profiling support

While Eideticker is a great tool for observing the external behaviour of the mobile browser, it hasn’t been able to tell us much about what’s going on inside. If something’s slow, why is it slow? If it’s slower than it was the day before, what’s the cause? If it’s faster? What explains the deviations in test results from one run to the other?

Thanks to Benoit Girard (+ a little bit of integration work from me), we can now start providing answers to these questions. Eideticker now has a mode that allows us to capture a sampling profile of the application while the video capture is ongoing. From the dashboard, you can now get access said profile, just by clicking on a link.

Note that the profile is not yet synchronized precisely to the videocapture (the profile works over the entire run of the browser), but Benoit is busily making that happen. That should hopefully land soon, in the mean time we still have some pretty useful data.

To say I’m excited about this would be an understatement. I think it has the potential to open up a whole new world of understanding of why our mobile (and desktop, someday) browser performs the way it does.

Startup / pageload testing

Eideticker has had support for measuring startup and page load time for a few months now, but I hadn’t yet hooked it up to the dashboard. As of today, it now is. There’s a bunch of different angles that are interesting to measure here (new vs. old profiles, whether the browser has been launched since boot, launching web applications, loading about:home or loading a web page, …) which I’ll get to in due course. For now, we at least have a baseline of how long it takes to see the Firefox homescreen on a Galaxy Nexus:

Of course, this is hooked up to the profiling support previously mentioned. Here’s an example profile.

I’ve already filed one bug based on the data gathered so far.

[ For more information on the Eideticker software I’m referring to, see this entry ]

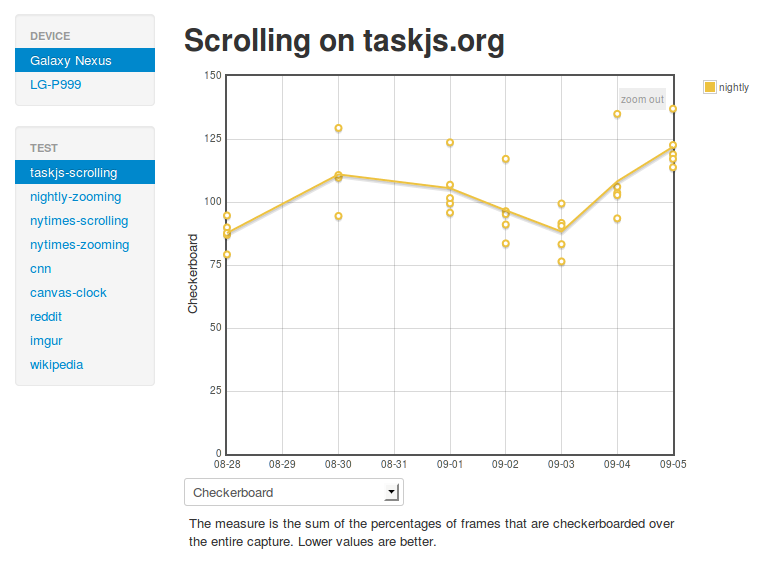

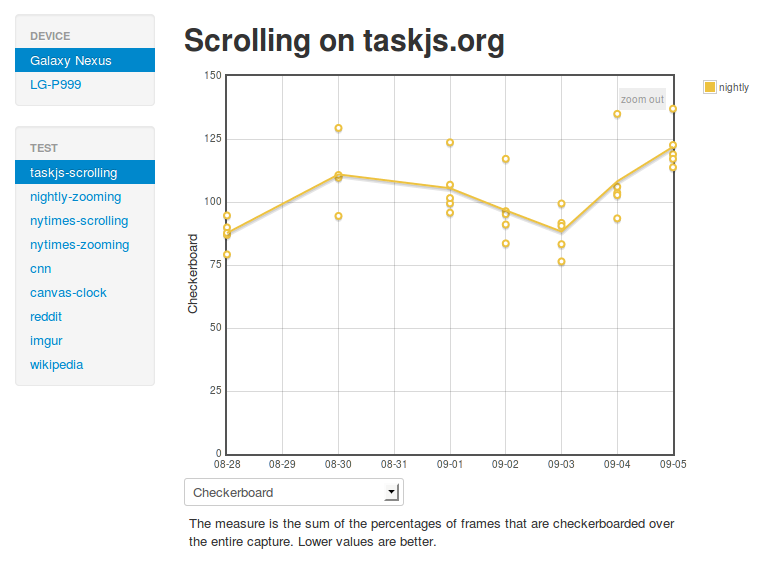

More on this to come, but just a quick note that the client-side URL schema for the Eideticker dashboard has been changed, as we now gather benchmarks for more than one device (Samsung Galaxy Nexus benchmarks FTW). To get the new and improved dashboard, please just go to the root:

http://wrla.ch/eideticker/dashboard

Old style URLs like http://wrla.ch/eideticker/dashboard/#/taskjs-scrolling/checkerboard will no longer work. Sorry for any broken links, this is the price of progress. 😉

Note that some benchmarks for the Galaxy Nexus are still missing. This is a known problem and will be fixed soon.

[ For more information on the Eideticker software I’m referring to, see this entry ]

I just merged a new approach I’ve been using to simulate touch events into the master branch of Eideticker called Orangutan.

As I’ve mentioned before, we really need to simulate actual user gestures when doing this type of testing to measure real-world performance with Eideticker. Up to now, I’ve been using google’s MonkeyRunner tool to do this. I was always a little skeptical about its approach (which involved using a privileged tool written in Java with special access to Android’s windowing system), but up until recently I’d managed to get around its issues with a successively more complicated series of hacks.

Unfortunately, I finally came up with a problem that I couldn’t figure out how to fix: monkeyrunner doesn’t attach precise timing information to the events it generates, which completely throws off Google Chrome for Android when you try to simulate a pan gesture. I’ve tried just about every way of using the existing functionality (both the networked mode and the “script” mode), but nothing seemed to help. My conclusion is that the only way of continuing to use monkey would be to create a fix for the software itself, which implies forking and extending the entire Android Open Source Project. As noble a goal as that might be, doing that across all the major Android versions I want to support (2.2, 2.3, 4.0 and now 4.1) was more work than I felt like taking on (not to mention the question of how to deploy that work). I decided to build something entirely new which did not have this requirement.

Enter Orangutan. Unlike MonkeyRunner, Orangutan simply injects events directly into low-level the kernel device file that represents an Android device’s touch screen. It’s fast (written in native C), trivial to build, and seems to work seamlessly with any application I’ve tried using it with (including Google Chrome for Android). Most interestingly for Mozilla, this interface is also present on Firefox OS (Boot to Gecko) based devices, so we should be able to use Orangutan there to support both Eideticker and any other testing framework which needs to test real-world user input test cases. Exciting times!

A while back, I began work on a new test framework for mobile browsers called Eideticker, which aims to benchmark browsers by capturing them on HMDI video, then running image analysis on the result. I wrote about this in a blog post entitled, “Measuring what the user sees.” Some seven months later, we are about to release a new version of Firefox for Android and Eideticker has played a major role in qualifying its performance and identifying areas for improvement along the way.

I thought it would be worth publicizing some of the results that we have seen so far and explain why Eideticker has been useful. This post aims to explain the ideas behind Eideticker and hopes to inspire ideas on how to further improve objective cross-browser benchmarks.

Idea 1: Put cross-browser performance tests on a more rigorous footing

One of the problems with existing benchmarks is that the graphical performance that they measure is entirely synthetic. So when something like Microsoft’s fishbowl demo claims 50 frames per second, that is based entirely on an internal measurement. There is no guarantee that is what the user is actually seeing. For all we know, it could be throwing half those frames away. To say nothing of the fact that measuring the results could interfere with the results themselves!

With Eideticker, we only analyze what the user sees (under the assumption that what the user sees is what comes out of the device’s HDMI out). To measure frame rate, Eideticker painstakingly analyzes a video capture to see the difference between each frame. Only if one frame is qualitatively different than the one before it will it consider it to be a step forward in the animation progression. There is no room for a browser to “cheat” by, for example, throwing a frame away. Here are two example frames from an Eideticker capture of an animated clock, along with a visual representation of the difference it measured between them:

Note: The red area of the graphic on the right indicates a region that Eideticker has detected as having changed between the two images. The black area of the graphic indicates a region that is unchanged.

Seeing visual evidence like this increases our confidence that things are truly better than they were before. For example, Eideticker very clearly, and accurately, measured the improvement when Off Main Thread Compositing landed. We saw a big performance jump in the afore-mentioned clock benchmark:

Note: Nightly = the new Firefox for Android as of that date (an incremental step towards what was just released), XUL = Previous Firefox for Android, Stock = Default browser that comes with Android 2.2.

Idea 2: Measure subjective performance based on actual user interaction patterns

Measuring real browser output is useful, but the problem is that these benchmarks do not actually measure how a user experiences the Web. Does an animated image of a clock or a fishtank actually represent a normal user experience? Thanks to the development of mobile gaming, this is slowly changing, but I was still say “no.” The majority of users time spent mobile browsing is spent on news websites, Wikipedia, Facebook and I Can Haz Cheezburger. For these sites, I would submit that there are two things users care about:

-

When I swipe to move the content (e.g. to scroll down to see more content on CNN.com), does it respond instantaneously and in a pleasing manner? Do I see a nice smooth animation or a jerky mess?

-

When the content moves, do I see new content right away? Or do I have to wait a few moments while the view updates (this slow load is also called checkerboarding)?

I think the key here is to measure what the user sees. Internal measurements for anything other than what the user experiences are misleading and inconsistent across browsers.

For the first item, I believe the best indication of perceived responsiveness and smoothness is found by measuring the number of frames that are displayed in response to user interaction. As with all measurements, it is not perfect, but one can safely say that if there are only a few frames changed in response to a swipe, the experience is going to be jerky.

Compare these two videos of panning on CNN.com. The first is the previous Firefox for Android, clocking in at about 12.3 frames per second (fps). The second is the recent Firefox for Android , clocking in at 23 fps using Eideticker’s internal measurements:

For the second, we can easily measure how quickly a user sees content by setting the background color of the page to an obvious color (in Eideticker we use magenta), overlaying an image on top, then measuring the amount of the page that is magenta while panning around. Since all modern browsers just use the background color of the page when something is to be redrawn (or at least can be configured that way), it’s easy to compare across browsers. You can see in the videos above that the new Native Firefox does much better than the old one in this regard, at least on this benchmark. Here’s an image of Eideticker detecting checkerboarding on a capture (red indicates checkerboarded areas):

Note: The red area of the graphic on the right indicates a region that Eideticker has detected as checkerboarded (i.e. undrawn). The black area of the graphic indicates a region that is fully drawn.

The important thing in both cases is that these captures represent a real user experience. The swipe gestures are synthesized such that they are interpreted by Android as an actual touch event. This is important: using tricks like javascript scrollTo inside your Web page to pan would not actually engage the renderer in quite the same way. On Firefox for Android (and probably other browsers as well, though I haven’t checked), the response to a touch event is always handled inside the browser internal rendering engine to give the expected “physical feel.” Manually moving the viewport would give completely different results since so much of the fancy code we use to reduce the redraw delay would not be engaged.

Conclusion

Overall, I am very happy with how Eideticker has allowed us to track and measure improvements in Firefox for Android. In developing Eideticker, we aimed to create a benchmark that measured how users actually interact with a browser – not how abstract measurements claim how great a browser is. As we move ahead with new projects to improve Firefox for Android, Eideticker will continue to be useful in making sure that using our browser is the best experience that it can be, especially combined with other tools like Benoit Girard’s sampling profiler.

For more information on Eideticker, feel free to visit its homepage on wiki.mozilla.org.

[ For more information on the Eideticker software I’m referring to, see this entry ]

tl;dr: You can now run the standard eideticker benchmarks easily on any Android phone without any kind of specialized hardware.

So Eideticker is pretty great at comparing relative performance between different browsers and generally measuring things in an absolutely neutral way. Unfortunately it’s a bit of a pain to use it at the moment to catch regressions: the software still has a few bugs and encoding/decoding/analyzing the capture still takes a great deal of time. Not to mention the fact that it currently requires specialized hardware (though that will soon be less of a concern at least inside MoCo, where we have a bunch of Eideticker boxes on order for the Toronto and Mountain View offices).

A few months ago, Chris Lord wrote up some great code to internally measure the amount of checkerboarding going on in Fennec. I’ve thought for a while that it would be a neat idea to hook this up to the Eideticker harness, and today I finally did so. After installing Eideticker, you can now run the benchmark on any machine against an arbitrary fennec build just by typing this from the eideticker root directory:

adb shell setprop log.tag.GeckoLayerRendererProf DEBUG

./bin/get-metric-for-build.py --no-capture --get-internal-checkerboard-stats --num-runs 3 nightly.apk src/tests/scrolling/taskjs.org/index.html

In return, you’ll get some nice clean results like this:

=== Internal Checkerboard Stats (sum of percents, not percentage) ===

[167.34348, 171.871015, 175.3420296]

Just to be sure that the results were comparable, I did a quick set of runs on the Eideticker machine in Mountain View with both internal checkerboard statistics gathering and HDMI capturing enabled.

| Stats file |

HDMI capturing |

| 167.34348 |

177.022 |

| 171.87 |

184.46 |

| 175.34 |

184.44 |

While the results aren’t identical (we measure number of frames differently inside Fennec than we do with Eideticker, for one thing), they do seem roughly correlated. So go forth, benchmark and tweak! 😉

P.S. If you’ve been following mobile automation, you might be asking why I don’t just suggest running Talos and Robocop on your workstation. Can’t they do the same sorts of things? The short answer is that yes, they can, but unfortunately they’re much more involved to set up and use at the moment. Arguably they shouldn’t be, and this is something we (Mozilla tools & automation) need to work on. We’ll get there eventually (and help would be welcome!). For now, hacks like this should help with getting out the first release of Fennec by providing a fast, easy to use tool for bisection and analysis.

[ For more information on the Eideticker software I’m referring to, see this entry ]

Participated in an interesting meeting on checkerboarding in Firefox for Android yesterday. As a reminder, checkerboarding refers to the amount of time you spend waiting to see the full page after you do a swipe on your mobile device, and it’s a big issue right now : so much so that it puts our delivery goal for the new native browser at risk.

It seems like we have a number of strategies for improving performance which will likely solve the problem, but we need to be able to measure improvements to make sure that we’re making progress. This is one of the places where Eideticker could be useful (especially with regards to measuring us against the competition), though there are a few things that we need to add before it’s going to be as useful as it could be. The most urgent, as I understand, is to come up with a suite of tests which accurately represent the set of pages that we’re having issues with. The current main measure of checkerboarding that we’re using with eideticker is taskjs.org which, while an interesting test case in some ways, doesn’t accurately represent the sort of site that the user would normally go to in the wild (and thus be annoyed by). 😉

This is going to take a few days (and a lot of review: I’m definitely no expert when it comes to this stuff) to get right, but I just added two tests for the New York Times which I think are a step in the right direction of being more representative of real-world use cases. Have a look here:

http://wrla.ch/eideticker/dashboard/#/nytimes-scrolling

http://wrla.ch/eideticker/dashboard/#/nytimes-zooming

The results here actually aren’t as bad as I would have expected/remembered. There amount of checkerboarding after a zoom out is a bit annoying (I understand this a known issue with font caching, or something) but not too terrible. Still, any improvements that show up here will probably apply across a wide variety of sites, as the design patterns on the New York Times site are very common.

(P.S. yes, I know I promised a comparison with Google Chrome for Android last time: rest assured that’s still coming soon!)

[ For more information on the Eideticker software I’m referring to, see this entry ]

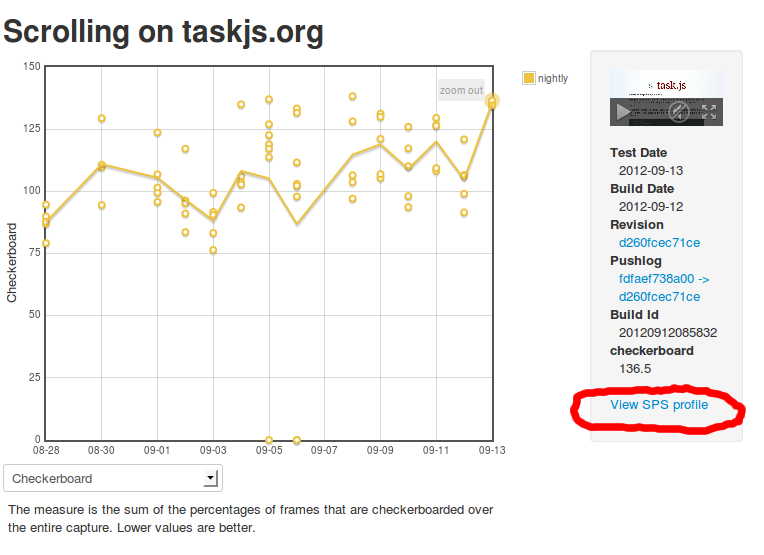

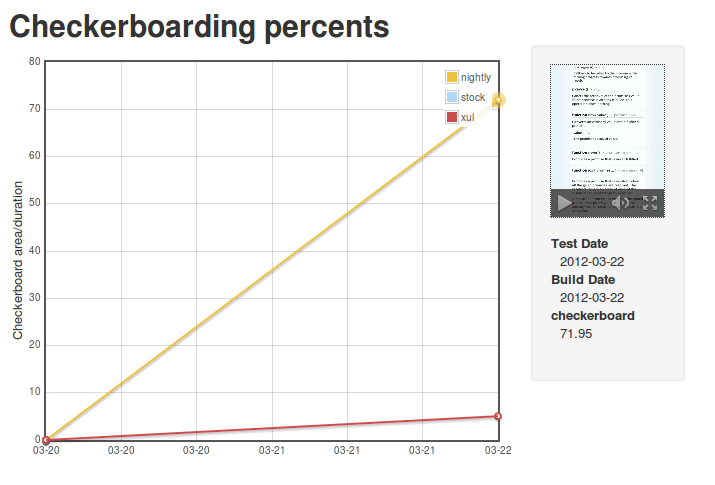

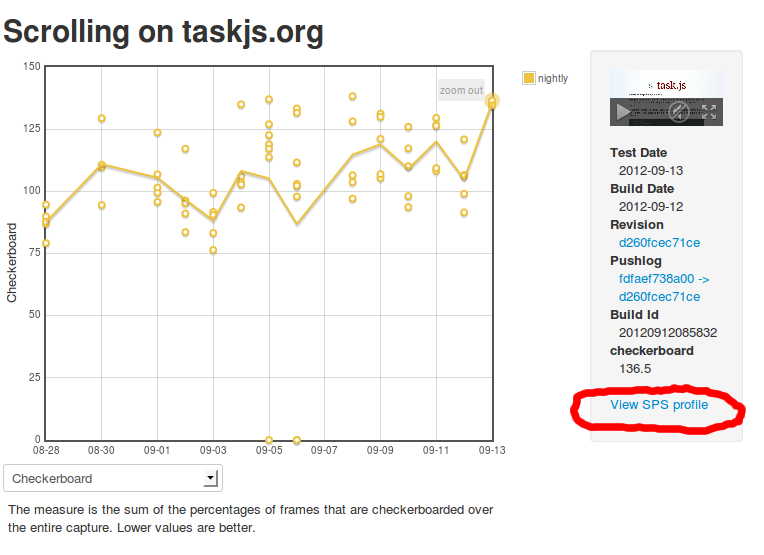

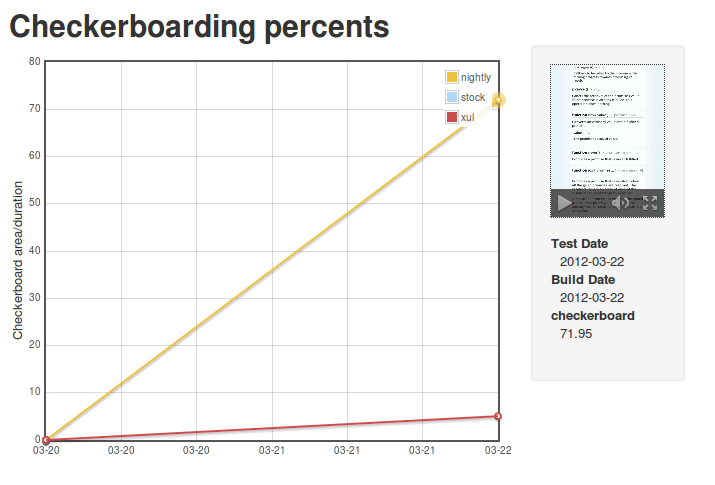

Since my first Eideticker dashboard post was so well received, I thought I’d give a quick update on another metric that I just brought online: checkerboarding (a.k.a. the amount of time you spend waiting to see the full page after you do a swipe on your mobile device).

[ link to real thing ]

Unfortunately the news here is not as good as before: as the numbers indicate, the new Native Fennec currently performs substantially worse than the version in Android market. This is a known issue, and is currently being tracked in bug 719447.

Next up: Seeing how we do against Google Chrome for Android.