Showing posts tagged Eideticker

Over the last while, Clint Talbert and I have been working on setting up automatic mobile performance tests using Eideticker (a framework to measure perceived Firefox performance by video capturing automated browser interactions: for more information, see my earlier post).

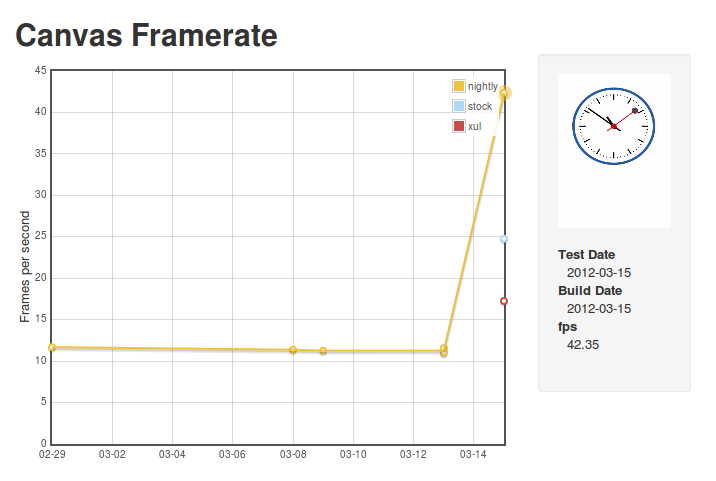

There’s many reasons why this is interesting, but probably the most important one is that it can measure differences reliably across different types of mobile browsers. Currently I’m testing the old XUL fennec, the Android stock browser, and the latest nightlies.

I’m pleased to announce that the first iteration of the dashboard is available for public consumption, on my site.

http://wrla.ch/eideticker/dashboard/#/canvas

The demo is pretty cheesey (just click on any of the datapoints to see the video capture), but nonetheless does seem to illustrate some interesting differences between the three browsers. The big jump in performance for nightly comes from the landing of the Maple branch, which happened earlier this week. Hopefully this validates some of the work that the mobile/graphics team has been doing over the past while. Exciting times!

I’ve been spending a bit more time on refining the checkerboarding tests in Eideticker that I talked about last time. Most of my work has been focused on making the results as representative of a real world scenario as possible, to that effect I’ve been working on:

- Changed the test case from a web site of my own concoction to a more realistic example (the taskjs.org site)

- Use actual Android native events (via MonkeyRunner) to synthesize touch-based scrolling instead of simulating the event in JavaScript (which exercises a completely different codepath).

- Fixing various synchronization issues to make results more repeatable. Before captures were of wildly variable lengths, which made the numbers extremely suspect. There’s probably still a few issues, but much less than before.

The end result of this is a framework that gives much more meaningful results. The bad news is that the results that I’m measuring don’t show a very positive picture for where we’re at with the native re-write of Firefox. Even relative to the version of mobile Firefox which is currently on the Android Market, we still have some catching up to do. Here’s some video of the “old” firefox in action:

And here’s the Native fennec (what we’re currently offering in nightly, with some minor modifications by me to change the way the “checkerboard” is drawn for analysis purposes):

The numbers behind this comparison:

| Platform |

Percent checkerboarding over run of test |

| Old Fennec |

2% |

| Native Fennec |

57% |

(by the way, this performance regression is filed as bug 719447)

I know there’s lots of great effort going into improving this situation, so I have hope that we’ll be doing much better on this metric in the coming days/weeks. The process for creating these videos/analyses is mostly automated at this point, so my plan is to create a small dashboard (ala arewefastyet.com) to measure these numbers over time on the latest nightlies. Stay tuned!

After my post on measuring checkerboarding in mobile Firefox, Clint Talbert (my fearless manager) suggested I run a before and after test to measure the improvement that just landed as part of bug 709512. After a bit of cleanup, I did so, measuring the delta between my build on December 20th and the latest version of Aurora. The difference is pretty remarkable: at least on the LG G2X that I’ve been using for testing, we’ve gone from checkerboarding between 10–20% of the time and not checkerboarding almost at all (in between two runs of the test with the Aurora build, there is exactly one frame that checkerboards). All credit to Chris Lord for that!

See the video evidence for yourself. Before:

After: