Showing posts tagged Free Software

One of my frustrations with the Linux desktop is the lack of an email client that’s in the same league as Gmail or Apple’s Mail.app. Thunderbird is OK as far as it goes (I use it for my day-to-day Mozilla correspondence) but I miss having a decent conversation view of email (yes, I tried the conversation view extension; while impressive in some ways, it ultimately didn’t work particularly well for me) and the search functionality is rather slow and cumbersome. I’d like to be optimistic about these problems being fixed at some point, but after nearly 2 years of using the product without much visible improvement my expectation of that happening is rather low.

The Yorba non-profit recently started a fundraiser to work on the next edition of Geary, an email client which I hope will fill the niche that I’m talking about. It’s still pretty rough around the edges, but even at this early stage the conversation view is beautiful and more or less exactly what I want. The example of Shotwell (their photo management application) suggests that they know a thing or two about creating robust and usable software, not a common thing in this day and age. In any case, their pitch was compelling enough for me to donate a few dollars to the cause. If you care about having a great email experience that is completely under your control (and not that of an advertising or product company with their own agenda), then maybe you could too?

I’ve been spending the last month or so at Mozilla prototyping a new project called Eideticker which aims to use video capture data and image/frame analysis for performance measurement of Firefox Mobile. It’s still in quite a rough state, but it’s now complete enough that I thought it would be worth spending a bit of time describing both its motivation and how it works.

First, a bit of an introduction. Up to now, our automated performance tools have used entirely synthetic benchmarks (how long until we get the onload event? how many ms since we last hit the main loop?) to gather performance information. As we’ve found out, there’s a lot you can measure with synthetic benchmarks. Tools like Talos have proven themselves by catching performance regressions on a very regular basis.

Still, there are many things that synthetic benchmarks can’t easily or reliably measure. For example, it’s nice to know that a page has triggered an “onload” event (and the sooner it does that, the better), but what does the browser look like before then? If it’s a complicated or image-intensive page, it might take 10 or 15 seconds to load. In this interval, user studies have clearly shown that an application displaying something sooner rather than later is always desirable if it’s not possible to display everything immediately (due to network traffic, CPU constraints, whatever). It’s this area of user-perceived performance that Eideticker aims to help with. Eideticker creates a system to capture live data of what the browser is displaying, then performs image/frame analysis on the result to see how we’re actually doing on these inherently subjective metrics. The above was just one example; others might include:

- Measuring the amount of time it takes to actually see the start page from time of launch.

- Measuring the amount of time you see the checkerboard pattern after panning the browser.

- Measuring the visual artifacts while loading a complicated page (how long does it take to display something? how long until we get something close to the final expected result? how long until we get the actual final result?)
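To make the last item a bit more concrete, here’s a minimal Python sketch of how those questions could be answered from a list of captured frames. It is only an illustration, not Eideticker’s code: the function names (`frame_difference`, `load_milestones`), the `threshold` value, and the assumption that frames are already decoded into equally sized NumPy arrays are all mine.

```python
import numpy as np


def frame_difference(a, b):
    """Mean absolute per-channel difference between two frames."""
    # Cast to a signed type first so uint8 subtraction cannot wrap around.
    a = np.asarray(a, dtype=np.int16)
    b = np.asarray(b, dtype=np.int16)
    return float(np.mean(np.abs(a - b)))


def load_milestones(frames, capture_fps, threshold=2.0):
    """Return (seconds until first visible change, seconds until the final image appears).

    frames: sequence of equally shaped uint8 arrays, in capture order.
    capture_fps: frames per second of the capture (an input, not measured here).
    threshold: difference below which two frames are treated as identical
               (hypothetical value, would need tuning against real capture noise).
    """
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame")

    first, last = frames[0], frames[-1]

    # Index of the first frame that visibly differs from the starting frame.
    first_change = next(
        (i for i, f in enumerate(frames) if frame_difference(f, first) > threshold),
        None,
    )

    # Walk backwards to find where the trailing run of "final" frames begins.
    settled = len(frames) - 1
    while settled > 0 and frame_difference(frames[settled - 1], last) <= threshold:
        settled -= 1

    def to_seconds(index):
        return None if index is None else index / capture_fps

    return to_seconds(first_change), to_seconds(settled)
```

The “close to the final expected result” question could be handled the same way with a looser threshold against the last frame.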

It turns out that it’s possible to put together a system that does this type of analysis using off-the-shelf components. We’re still very much in the early phase, but initial signs are promising. The initial test system has the following pieces:

- A Linux workstation equipped with a DeckLink Extreme 3D video capture card

- An Android phone with HDMI output (currently using the LG G2X)

- A version of Talos modified to video capture the results of a test.

- A bit of Python code to actually analyze the video capture data.

So far, I’ve got the system working end-to-end for two simple cases. The first is the “pageload” case. This lets you capture the results of loading any page within a Talos pageset. Here’s a quick example of the movie we generate from a tsvg test:

Here’s another example, a color cycle test (actually the first test case I created, as a throwaway):

After the video is captured, the next step is to analyze it! As described above (and in further detail on the Eideticker wiki page), there are lots of things we could measure, but the easiest thing is probably just to count the number of unique frames and derive a frame rate for the capture based on that (the higher the better, obviously). Based on an initial prototype from Chris Jones, I’ve started work on a Python library to do exactly this. Assuming you have an Eideticker capture handy, you can run a tool called “analyze.py” on the command line, and it’ll give you its best guess of the number of unique frames:

    (eideticker)wlach@eideticker:~/src/eideticker$ bin/analyze.py ./src/talos/talos/captures/capture-2011-11-11T11:23:51.627183.zip
    Unique frames: 121/272

(There are currently some rough edges with this: we’re doing frame comparisons based on per-pixel changes, but the video capture data is slightly noisy, so sometimes a pixel changes its value even when nothing has actually happened in the browser.)
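For the curious, here’s a rough sketch of what unique-frame counting with a bit of noise tolerance might look like. To be clear, this is not the code in analyze.py: the function names, the `pixel_tolerance` and `min_changed_fraction` values, and the assumption that frames arrive as height × width × channels NumPy arrays are all hypothetical, chosen only to illustrate the idea.

```python
import numpy as np


def count_unique_frames(frames, pixel_tolerance=8, min_changed_fraction=0.001):
    """Count frames that visibly differ from the last frame counted as unique.

    frames: iterable of equally shaped uint8 arrays (height x width x channels).
    pixel_tolerance: per-channel difference treated as capture noise (assumed value).
    min_changed_fraction: share of pixels that must change before a frame
                          counts as new (assumed value).
    """
    unique = 0
    reference = None
    for frame in frames:
        # Signed type so that subtracting uint8 values cannot wrap around.
        current = np.asarray(frame, dtype=np.int16)
        if reference is None:
            unique += 1
            reference = current
            continue
        # A pixel counts as changed if any channel moved by more than the tolerance.
        changed = (np.abs(current - reference) > pixel_tolerance).any(axis=-1)
        if changed.mean() >= min_changed_fraction:
            unique += 1
            reference = current
    return unique


def effective_frame_rate(unique_frames, total_frames, capture_fps):
    """Unique frames per second over the length of the capture."""
    if total_frames == 0:
        return 0.0
    return unique_frames / (total_frames / capture_fps)
```

Comparing against the last unique frame (rather than the immediately preceding one) means a slow, gradual change still gets counted once it adds up to more than the noise threshold.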

So that’s what I’ve got working so far. What’s next? Short term, we have some specific high-level goals about where we want to be with the system by the end of the quarter. The big unfinished pieces are getting an end-to-end test with real user interaction (typing into the URL bar, etc.) working, and turning this prototype system into something that’s easy for others to duplicate and robust enough to be easily extended. Hopefully this will come together fairly quickly now that the basics are in place.

The longer-term picture really depends on feedback from the community. Unlike many of the projects we work on in automation & tools, Eideticker is not meant to be something that’s run on every checkin. Rather, it’s intended to be a useful tool that can be run on an as-needed basis by developers and QA. We obviously have our own ideas on how something like this might be useful (and what a reasonable user interface might be), but I’ve found in cases like this it’s much better to go to the people who will actually be using this thing. So with that in mind, here’s a call for feedback. I have two very specific questions:

- Is there a specific problem you’ve been working on that a framework like this might be helpful for?

- What do you think of the current workflow model described in the README?

My goal is to make something that people will love, so please do let me know what you think. Nothing about this project is cast in stone and the last thing I want is to deliver a product that people don’t actually want to use.

Equally, while Eideticker is being written primarily with the goal of making Mobile Firefox better (and in the slightly-less short term, desktop Firefox and Boot to Gecko), much of it is broadly applicable to any user-facing mobile or desktop application. If you think some component of Eideticker might be interesting to your project and want to collaborate, feel free to get in touch.

-

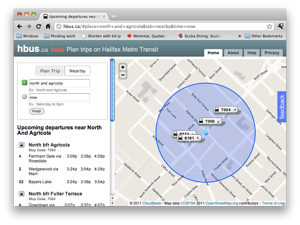

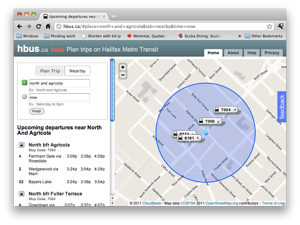

As you may or may not have noticed, hbus.ca has been down for the past few weeks. Halifax updated the data but I didn’t have a chance to update it. Well, I finally did, and hbus is now up in its former glory (minus a small issue with stops named ‘6016_merged_3300509′: thanks Metro Transit, time to update that script to massage your data again!) . I still wonder a bit about why I keep putting time into a site oriented around a city I haven’t lived in for over 2 years now (especially when Google Maps arguably does a better job at most things than I do), but there you go!

- NIXI is still up and being useful to me, though I’m a little bit disappointed by its uptake from the rest of the world (the site gets like 5–12 unique views a day). What do I need to do for you guys? A mobile version? French localization? I just added support for Washington (Capital Bikeshare) and Boston (Hubway), maybe it’ll get some uptake there.

- Not really my project, but Stephane Guidoin put up an awesome site called zonecone.ca which helps you find out about traffic obstacles that might delay your journey. It even has a nifty feature where you can create an account, specify a route you take regularly (say, your commute), and it’ll automatically notify you if something pops up. As you may have guessed, I’m not the biggest fan of automobiles, but this is still very cool. 😉 This site was originally based on the map layout template that I announced a few months ago.

- I have a lettuce plant growing on my new balcony. It’s doing ok, though it will probably have to be brought in soon due to frost. Will it get enough sun? Can I prevent my cat from eating it? Will it make a delicious salad? Stay tuned!

Just a quick note to say that I just open-sourced the software behind hbus.ca, nicknamed “Routez”, under the Affero GPL. You can get the code on GitHub.

For those new to the project, hbus.ca is a generic trip planning / transit information site for Halifax, Nova Scotia written using the Django web framework. It currently has two main features:

- A trip planning front-end much like Google Transit (built from the ground up using the libroutez library).

- A “nearby” routes feature which gives you all the bus departures near a particular location.

On the backend, both of these features are accessible via JSON APIs, for use in transit apps, etc. Transit to Go uses these to great effect.

There is nothing particularly Halifax-specific to the underlying Routez software, aside from various references in the web front end to Halifax and hbus.ca. In fact, we use Routez to provide information for Transit to Go Edmonton right now, with no modification.

Originally my plan was to release something that was completely generic out of the box so that anyone could trivially make up a version of this site for their favourite city. I’ve made some headway towards that goal over the last week or so, but there’s still some way to go. There are basically two major issues:

- The geocoder depends on information gleaned from the GeoBase road network dataset. The intent behind this is noble (provide an end-to-end solution that doesn’t depend on third parties) but in practice this limits the software’s usefulness. It would be better to optionally allow a Routez-based site to use Google’s geocoder on the front-end. Unfortunately, to comply with Google’s terms of service, we’d also need to use their Maps API for the base map. Perhaps the best option here would be to use something like Mapstraction to allow users to select their preferred mapping provider.

- The trip planning software used in the backend, libroutez, is getting a bit long in the tooth and is quite finicky about what kind of data it will accept. I think the long-term solution to this is to switch to Graphserver (which is more mature and better supported), but some features would have to be added to it to support the kind of things that Routez needs (like a list of upcoming departures at a particular transit stop).

Even with these problems, I figured it would be better to open up what I have for people to check out and play with. Have a look and let me know what you think!

A few weekends ago, there was a Montréal Ouvert hackfest at the Notman House. I decided to take a bit of a break from my usual transit hacking and built up a mobile-friendly interface to the wonderful Déchets Montréal, which lets residents easily get information on their garbage collection schedule.

The interface is intentionally quite simplistic, the idea being that if you’re accessing the site using a mobile device you’re probably only interested in the collection schedule for the current week and nothing else. If you want something more complicated you probably should just be using the full site.

Anyway, another fun opportunity to play with mobile web technology (a bit of a break from my current consulting gig, which is mostly native iPhone apps). A few things that I learned this time around:

- It’s easy to give your application a nice icon when added to the iPhone home screen by using a webpage icon.

- Related to the above, you can give the user a nice hint to add your webpage to their home screen by using Google’s mobile bookmark bubble library.

- The iPhone’s form interface will persist after pressing “Search” unless you change the focus using an anchor element.

- jQuery is the best thing since sliced bread for dynamic web applications (ok, I actually knew this already but I just can’t get over how great it is).

Thanks muchly to Kent Mewhort, the brains behind Déchets Montréal, for helping me incorporate my work into his Drupal-based site.

Those who’ve known me for a while have probably heard about my first major open source project, libwpd. In a nutshell, it’s a parser for WordPerfect documents with the primary aim of converting them into something usable by the major open source office programs out there. It’s used by LibreOffice, OpenOffice.org, AbiWord, and KOffice. WordPerfect isn’t the most popular word processor out there, but there are still quite a number of legacy documents in that format, especially in the legal community (which was almost exclusively using WordPerfect until very recently).

This project goes way back: I started work on it with Marc Maurer in 2002 (just after I graduated from university). I put a rather ridiculous amount of unpaid work into it for a few years. WordPerfect’s streaming document format is a bit esoteric to say the least, and figuring out how to map it into the document model used by more modern software was a pretty interesting problem. I still remember spending sleepless nights trying to reliably convert WordPerfect’s outlining into structured lists (I mostly succeeded).

Since then, I’ve mostly moved on to other things, leaving the project in the capable hands of Fridrich Strba, who’s been steadily working on adding a number of important features to the library that massively improve import fidelity. I did have time this summer to add page numbering support (thanks to Yam Software for sponsoring that work) and move the project over to Git from CVS, but for the most part it’s been his show since late 2004.

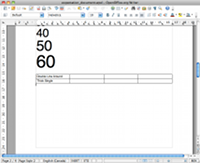

Even if I’m not as actively involved as I once was, when there are major developments, I still get excited (perhaps in the way that a parent might about a child who’s left the household). And yesterday brought something pretty big: libwpd 0.9.0. With this release, we finally support graphics (thanks to the work of Fridrich and Ariya Hidayat on libwpg), notes, the page numbering that I mentioned above, and encrypted documents. It’s a big deal. Here are some before and after screenshots:

All this goodness should be available transparently whenever you import a WordPerfect file in an upcoming release of LibreOffice. AbiWord and KOffice filters should come soon enough as well (the updates needed to support libwpd 0.9 are fairly minimal).

Integration with OpenOffice.org is another story. Without going into a great amount of detail on the situation (see this article on Ars Technica for the gory details if you’re really interested), it’s quite unlikely that OpenOffice.org WordPerfect support will advance unless (1) someone volunteers to do it and (2) Oracle drops their copyright assignment policy. The chances of these things happening seem rather low to me. My personal recommendation would be to switch to LibreOffice as soon as the first production version is released. I expect it to rapidly overtake OpenOffice.org in functionality due to its more open participation model.