Showing posts tagged Mozilla

A while back, I began work on a new test framework for mobile browsers called Eideticker, which aims to benchmark browsers by capturing them on HDMI video, then running image analysis on the result. I wrote about this in a blog post entitled, “Measuring what the user sees.” Some seven months later, we are about to release a new version of Firefox for Android and Eideticker has played a major role in qualifying its performance and identifying areas for improvement along the way.

I thought it would be worth publicizing some of the results that we have seen so far and explaining why Eideticker has been useful. This post aims to explain the ideas behind Eideticker and hopes to inspire ideas on how to further improve objective cross-browser benchmarks.

Idea 1: Put cross-browser performance tests on a more rigorous footing

One of the problems with existing benchmarks is that the graphical performance that they measure is entirely synthetic. So when something like Microsoft’s fishbowl demo claims 50 frames per second, that is based entirely on an internal measurement. There is no guarantee that is what the user is actually seeing. For all we know, it could be throwing half those frames away. To say nothing of the fact that measuring the results could interfere with the results themselves!

With Eideticker, we only analyze what the user sees (under the assumption that what the user sees is what comes out of the device’s HDMI out). To measure frame rate, Eideticker painstakingly analyzes a video capture to see the difference between each frame. Only if one frame is qualitatively different than the one before it will it consider it to be a step forward in the animation progression. There is no room for a browser to “cheat” by, for example, throwing a frame away. Here are two example frames from an Eideticker capture of an animated clock, along with a visual representation of the difference it measured between them:

Note: The red area of the graphic on the right indicates a region that Eideticker has detected as having changed between the two images. The black area of the graphic indicates a region that is unchanged.
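To make the frame-difference idea concrete, here is a minimal Python sketch. It is not Eideticker's actual code: the frame representation (small grayscale images as lists of rows), the function names and both thresholds are assumptions chosen to keep the example dependency-free.

```python
# Illustrative sketch only, not Eideticker's implementation.
# Assumption: each frame is a grayscale image stored as a list of rows.


def changed_pixels(prev, curr, pixel_threshold=10):
    """Count pixels whose brightness differs by more than pixel_threshold."""
    return sum(
        1
        for prev_row, curr_row in zip(prev, curr)
        for p, c in zip(prev_row, curr_row)
        if abs(p - c) > pixel_threshold
    )


def count_unique_frames(frames, pixel_threshold=10, min_changed=2):
    """Count frames that differ visibly from the frame before them.

    The first frame always counts. A later frame counts only if at least
    min_changed pixels moved by more than pixel_threshold (hypothetical
    values meant to ignore capture noise).
    """
    if not frames:
        return 0
    unique = 1
    for prev, curr in zip(frames, frames[1:]):
        if changed_pixels(prev, curr, pixel_threshold) >= min_changed:
            unique += 1
    return unique


def effective_fps(frames, capture_fps, **kwargs):
    """Unique frames per second of captured video."""
    if not frames:
        return 0.0
    return count_unique_frames(frames, **kwargs) * capture_fps / len(frames)


# Four frames captured at 60 fps, but only two distinct images were drawn.
a = [[0, 0, 0], [0, 0, 0]]
b = [[0, 255, 255], [0, 255, 0]]
capture = [a, a, b, b]
print(count_unique_frames(capture))  # 2
print(effective_fps(capture, 60))    # 30.0
```

A browser that reports 60 frames per second internally but repeats every frame would score 30 here, which is exactly the kind of discrepancy an external capture can expose.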

Seeing visual evidence like this increases our confidence that things are truly better than they were before. For example, Eideticker very clearly, and accurately, measured the improvement when Off Main Thread Compositing landed. We saw a big performance jump in the aforementioned clock benchmark:

Note: Nightly = the new Firefox for Android as of that date (an incremental step towards what was just released), XUL = Previous Firefox for Android, Stock = Default browser that comes with Android 2.2.

Idea 2: Measure subjective performance based on actual user interaction patterns

Measuring real browser output is useful, but the problem is that these benchmarks do not actually measure how a user experiences the Web. Does an animated image of a clock or a fishtank actually represent a normal user experience? Thanks to the development of mobile gaming, this is slowly changing, but I would still say “no.” The majority of users’ mobile browsing time is spent on news websites, Wikipedia, Facebook and I Can Haz Cheezburger. For these sites, I would submit that there are two things users care about:

- When I swipe to move the content (e.g. to scroll down to see more content on CNN.com), does it respond instantaneously and in a pleasing manner? Do I see a nice smooth animation or a jerky mess?

- When the content moves, do I see new content right away? Or do I have to wait a few moments while the view updates (this slow load is also called checkerboarding)?

I think the key here is to measure what the user sees. Internal measurements for anything other than what the user experiences are misleading and inconsistent across browsers.

For the first item, I believe the best indication of perceived responsiveness and smoothness is found by measuring the number of frames that are displayed in response to user interaction. As with all measurements, it is not perfect, but one can safely say that if there are only a few frames changed in response to a swipe, the experience is going to be jerky.

Compare these two videos of panning on CNN.com. The first is the previous Firefox for Android, clocking in at about 12.3 frames per second (fps). The second is the recent Firefox for Android, clocking in at 23 fps using Eideticker’s internal measurements:

For the second, we can easily measure how quickly a user sees content by setting the background color of the page to an obvious color (in Eideticker we use magenta), overlaying an image on top, then measuring the amount of the page that is magenta while panning around. Since all modern browsers just use the background color of the page when something is to be redrawn (or at least can be configured that way), it’s easy to compare across browsers. You can see in the videos above that the new Native Firefox does much better than the old one in this regard, at least on this benchmark. Here’s an image of Eideticker detecting checkerboarding on a capture (red indicates checkerboarded areas):

Note: The red area of the graphic on the right indicates a region that Eideticker has detected as checkerboarded (i.e. undrawn). The black area of the graphic indicates a region that is fully drawn.
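The magenta trick is simple enough to sketch in a few lines of Python. This is an illustration rather than Eideticker's real analysis code: frames are assumed to be lists of rows of (r, g, b) tuples, the tolerance is a made-up value to absorb video compression noise, and summing the per-frame fractions into one score is my assumption for the example.

```python
# Illustrative sketch only, not Eideticker's implementation.
# Assumption: each frame is a list of rows of (r, g, b) tuples.
MAGENTA = (255, 0, 255)


def is_checkerboard(pixel, tolerance=30):
    """True if the pixel is close enough to the magenta page background."""
    return all(
        abs(channel - target) <= tolerance
        for channel, target in zip(pixel, MAGENTA)
    )


def checkerboard_fraction(frame, tolerance=30):
    """Fraction of the frame that is still showing the background color."""
    pixels = [pixel for row in frame for pixel in row]
    if not pixels:
        return 0.0
    undrawn = sum(1 for pixel in pixels if is_checkerboard(pixel, tolerance))
    return undrawn / len(pixels)


def checkerboard_score(frames, tolerance=30):
    """Sum of the per-frame fractions over a whole capture (lower is better)."""
    return sum(checkerboard_fraction(frame, tolerance) for frame in frames)


white = (255, 255, 255)
near_magenta = (250, 8, 247)  # magenta after some compression noise
frame_1 = [[white, white], [near_magenta, MAGENTA]]  # half undrawn
frame_2 = [[white, white], [white, MAGENTA]]         # a quarter undrawn
print(checkerboard_fraction(frame_1))            # 0.5
print(checkerboard_score([frame_1, frame_2]))    # 0.75
```

Because the score depends only on pixels in the capture, the same measurement works for any browser that falls back to the page background while redrawing.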

The important thing in both cases is that these captures represent a real user experience. The swipe gestures are synthesized such that they are interpreted by Android as an actual touch event. This is important: using tricks like javascript scrollTo inside your Web page to pan would not actually engage the renderer in quite the same way. On Firefox for Android (and probably other browsers as well, though I haven’t checked), the response to a touch event is always handled inside the browser internal rendering engine to give the expected “physical feel.” Manually moving the viewport would give completely different results since so much of the fancy code we use to reduce the redraw delay would not be engaged.

Conclusion

Overall, I am very happy with how Eideticker has allowed us to track and measure improvements in Firefox for Android. In developing Eideticker, we aimed to create a benchmark that measured how users actually interact with a browser – not how great abstract measurements claim a browser is. As we move ahead with new projects to improve Firefox for Android, Eideticker will continue to be useful in making sure that using our browser is the best experience that it can be, especially combined with other tools like Benoit Girard’s sampling profiler.

For more information on Eideticker, feel free to visit its homepage on wiki.mozilla.org.

Recently the Firefox source repository (mozilla-central) was converted over to a new license with a lovely short boilerplate. This is great, but here in automation and tools, we have a fairly large number of projects that live outside of the main tree but for which the new license still applies. A few weeks ago, I wanted to move one of our projects over (mozbase), but didn’t want to spend hours manually editing text files. I understand that a script was used to convert mozilla-central, but a quick google didn’t turn it up. [ edit: thanks to Ed Morley for pointing out to me that it lives here: http://hg.mozilla.org/users/gerv_mozilla.org/relic/ ]

I surely could have asked about where this script is, but this problem gave me an excuse to try something that I’d been meaning to for a while: Facebook’s codemod. Codemod is a neat little command-line utility which aims to help with mass refactorings of code. All you have to do is provide a few regular expressions to replace, and off it goes. I tried it out with mozbase, and the results were great: 5 minutes spent coming up with a regular expression and jiggering with command line options, and the job was done.

I had the desire to do this again today for Eideticker, and decided to document the (extremely simple) process for posterity. I just used this simple command line…

../codemod/src/codemod.py --extensions py -m '# \*\*\*\*\* BEGIN LICENSE.*END LICENSE BLOCK \*\*\*\*\*' '# This Source Code Form is subject to the terms of the Mozilla Public\n# License, v. 2.0. If a copy of the MPL was not distributed with this file,\n# You can obtain one at http://mozilla.org/MPL/2.0/.'

… et voila, out popped shiny new boilerplate.
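If you want to see what that regular expression is doing without installing codemod, the same substitution can be sketched with Python's re module. The sample header below is a hypothetical stand-in for the old boilerplate, and I'm assuming that re.DOTALL (letting the dot match newlines) is roughly what the -m option enables.

```python
import re

# Same pattern as on the command line above. re.DOTALL lets ".*" span the
# many lines between the BEGIN and END markers (assumed equivalent of -m).
OLD_LICENSE = re.compile(
    r"# \*\*\*\*\* BEGIN LICENSE.*END LICENSE BLOCK \*\*\*\*\*",
    re.DOTALL,
)

NEW_LICENSE = (
    "# This Source Code Form is subject to the terms of the Mozilla Public\n"
    "# License, v. 2.0. If a copy of the MPL was not distributed with this file,\n"
    "# You can obtain one at http://mozilla.org/MPL/2.0/."
)


def replace_license(source):
    """Swap the old license block for the new boilerplate."""
    # A function replacement sidesteps backslash processing in NEW_LICENSE.
    return OLD_LICENSE.sub(lambda match: NEW_LICENSE, source, count=1)


# Hypothetical file contents; the real old block is much longer.
sample = (
    "# ***** BEGIN LICENSE BLOCK *****\n"
    "# (stand-in for the old multi-line boilerplate)\n"
    "# ***** END LICENSE BLOCK *****\n"
    "\n"
    "import os\n"
)
print(replace_license(sample))
```

Unlike this sketch, codemod walks a whole directory tree and asks for confirmation before each change, which is what makes it pleasant for this kind of job.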

So yesterday we had a small get-together at my place, which gave me the opportunity to try something I’d been meaning to do for a while: build my own retroscope.

The idea is pretty simple: have a webcam record bits and pieces of a social event, then play them back on-the-spot a few minutes/hours later. I first heard about the concept from reading Nat Friedman’s blog entry from 2005 — if you read that, you see that he just hooked up a video camera to his TiVo. 7 years in the future, laptop webcams are ubiquitous and we have the awesome HTML5 <video> tag, so I figured it would be easy to knock up something interesting in short order with zero custom hardware.

Having only remembered that I wanted to do this about 30 minutes before people were scheduled to start arriving, I didn’t have much time to do anything really perfect. I settled on using this little snippet from stackoverflow to generate short (5 second) movies on my laptop, then used scp to copy them over and display a montage of them in an auto-refreshing webpage on my “television” (which is a Mac-Mini connected to a large computer monitor). Despite being a total hack job, the end result generated much amusement. I think this is a bit different from what Nat originally did (it sounds from his blog like his retroscope played back longer segments), but I think the end result is actually a bit more fun.

Perhaps unfortunately, but probably ultimately for the best, only a few snippets from the actual night got stored away. One example is this gem:

(yes, that handsome fellow with the Pernot is me)

I thought it might be fun to release the slightly cleaned-up results of this experiment as open source for others to play with, so I created a small project for it on github. Unlike the original version, no complicated scp scheme is required — I just reused Joel Maher’s most excellent mozhttpd library from mozbase to run a web server in the same process as the capture logic. All you need to do is run the server on a Linux machine with a webcam and connect to it with a web browser from any other machine on your local network.

https://github.com/wlach/retroscope

Enjoy!

Ok, this is somewhat mundane, but I’ve already had to do it twice (and helped someone do something similar on #mobile), so I figured I might as well blog about it for posterity.

For various automation tasks (notably the Eideticker dashboard and the cross-browser startup tests), we need to be able to launch an Android browser on the command line (via adb shell or our own custom SUTAgent). This is a bit of a black art, but you can find references on how to do this on stackoverflow and other places. The magic incantation is:

am start -a android.intent.action.VIEW -n <application/intent> -d <url>

So, for example, to launch Fennec, you’d run this on the Android command prompt:

am start -a android.intent.action.VIEW -n org.mozilla.fennec/.App -d http://mygreatsite.info

Ok, easy enough, but what if we want to launch a new browser that we just downloaded (e.g. Google Chrome)? Where do we get the application and intent names?

The short answer is that you need to reach into the apk and dig. 😉 There’s probably many ways of doing this, but here’s what I do (which has the distinct advantage of not needing to compile, download or run weird java applications):

- Copy the apk onto your machine (the apk should be in /data/app: if you have a rooted phone, you should be able to copy that off to your machine).

- Extract AndroidManifest.xml from the apk (it’s just a .zip) and run axml2xml.pl on it.

- Examine the resultant xml file and look for the <manifest> tag. It should have a property called package, which is the package name. For example:

We can see pretty clearly that the application name in this case is com.android.chrome (you can also get this by running ps when using the application)

- Finally, look for a tag called <intent-filter> with an <action> tag with android.intent.action.MAIN as the android-name property. Scan up for the overarching activity tag and read its android-name property. This is the activity name. For example:

Likewise here we see that the activity name we want is .Main (which Android explicitly expands out to com.android.chrome.Main)
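If you'd rather not eyeball the XML, the lookup can be scripted. The sketch below is only an illustration: the manifest is a hypothetical, heavily trimmed example, I'm assuming the decoded file uses the standard android: XML namespace, and I'm assuming the activity you want is the one whose intent filter lists android.intent.action.MAIN (the usual convention for an app's launcher activity).

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"
NAME_ATTR = "{%s}name" % ANDROID_NS  # how ElementTree spells android:name

# Hypothetical, heavily trimmed manifest; a real one has many more entries.
SAMPLE_MANIFEST = """\
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.android.chrome">
  <application>
    <activity android:name=".Main">
      <intent-filter>
        <action android:name="android.intent.action.MAIN"/>
        <category android:name="android.intent.category.LAUNCHER"/>
      </intent-filter>
    </activity>
    <activity android:name=".Settings"/>
  </application>
</manifest>
"""


def find_launch_component(manifest_xml, action="android.intent.action.MAIN"):
    """Return "package/activity" for the first activity handling `action`."""
    root = ET.fromstring(manifest_xml)
    package = root.get("package")
    for activity in root.iter("activity"):
        for action_tag in activity.iter("action"):
            if action_tag.get(NAME_ATTR) == action:
                return "%s/%s" % (package, activity.get(NAME_ATTR))
    return None


print(find_launch_component(SAMPLE_MANIFEST))  # com.android.chrome/.Main
```

The returned string is in the same package/activity form that the -n argument of am start expects.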

Armed with this information, you should now have enough information to launch the application. Furthering the example above, here’s how to start Chrome on Android via adb’s shell:

am start -a android.intent.action.VIEW -n com.android.chrome/.Main -d http://mygreatsite.info
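For automation it is handy to wrap this in a small helper. Here's a rough Python sketch that assumes adb is on your PATH and a device is connected; the function names are mine, not part of any existing harness.

```python
import subprocess


def am_start_args(component, url, action="android.intent.action.VIEW"):
    """Build the `am start` argument list for a package/activity and URL."""
    return ["am", "start", "-a", action, "-n", component, "-d", url]


def launch_browser(component, url, serial=None):
    """Run `am start` on the device through `adb shell`.

    Assumes adb is installed and a device is attached; pass `serial` to
    pick one device when several are connected. URLs containing shell
    metacharacters such as "&" would need extra quoting.
    """
    adb = ["adb"]
    if serial:
        adb += ["-s", serial]
    return subprocess.check_call(adb + ["shell"] + am_start_args(component, url))


# Only prints the command; call launch_browser() to actually run it.
print(" ".join(am_start_args("com.android.chrome/.Main", "http://mygreatsite.info")))
```

Swapping in org.mozilla.fennec/.App as the component gives you the Fennec launch from earlier, which is all a cross-browser test needs to vary.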

Hope this helps someone, somewhere.

[ For more information on the Eideticker software I’m referring to, see this entry ]

tl;dr: You can now run the standard eideticker benchmarks easily on any Android phone without any kind of specialized hardware.

So Eideticker is pretty great at comparing relative performance between different browsers and generally measuring things in an absolutely neutral way. Unfortunately it’s a bit of a pain to use it at the moment to catch regressions: the software still has a few bugs and encoding/decoding/analyzing the capture still takes a great deal of time. Not to mention the fact that it currently requires specialized hardware (though that will soon be less of a concern at least inside MoCo, where we have a bunch of Eideticker boxes on order for the Toronto and Mountain View offices).

A few months ago, Chris Lord wrote up some great code to internally measure the amount of checkerboarding going on in Fennec. I’ve thought for a while that it would be a neat idea to hook this up to the Eideticker harness, and today I finally did so. After installing Eideticker, you can now run the benchmark on any machine against an arbitrary fennec build just by typing this from the eideticker root directory:

adb shell setprop log.tag.GeckoLayerRendererProf DEBUG

./bin/get-metric-for-build.py --no-capture --get-internal-checkerboard-stats --num-runs 3 nightly.apk src/tests/scrolling/taskjs.org/index.html

In return, you’ll get some nice clean results like this:

=== Internal Checkerboard Stats (sum of percents, not percentage) ===

[167.34348, 171.871015, 175.3420296]

Just to be sure that the results were comparable, I did a quick set of runs on the Eideticker machine in Mountain View with both internal checkerboard statistics gathering and HDMI capturing enabled.

| Stats file | HDMI capturing |
| --- | --- |
| 167.34348 | 177.022 |
| 171.87 | 184.46 |
| 175.34 | 184.44 |

While the results aren’t identical (we measure number of frames differently inside Fennec than we do with Eideticker, for one thing), they do seem roughly correlated. So go forth, benchmark and tweak! 😉
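If you want to put a number on "roughly correlated", a Pearson correlation coefficient over the three runs is easy to compute. Treat this as a sanity check rather than a statistical claim: three data points are far too few to draw firm conclusions, and the helper below is just a plain-Python sketch.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(spread_x * spread_y)


# Values from the runs above.
internal_stats = [167.34348, 171.87, 175.34]
hdmi_capture = [177.022, 184.46, 184.44]
print(round(pearson(internal_stats, hdmi_capture), 2))
```

A value close to 1 means the two measurements rise and fall together, even though their absolute numbers differ.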

P.S. If you’ve been following mobile automation, you might be asking why I don’t just suggest running Talos and Robocop on your workstation. Can’t they do the same sorts of things? The short answer is that yes, they can, but unfortunately they’re much more involved to set up and use at the moment. Arguably they shouldn’t be, and this is something we (Mozilla tools & automation) need to work on. We’ll get there eventually (and help would be welcome!). For now, hacks like this should help with getting out the first release of Fennec by providing a fast, easy to use tool for bisection and analysis.

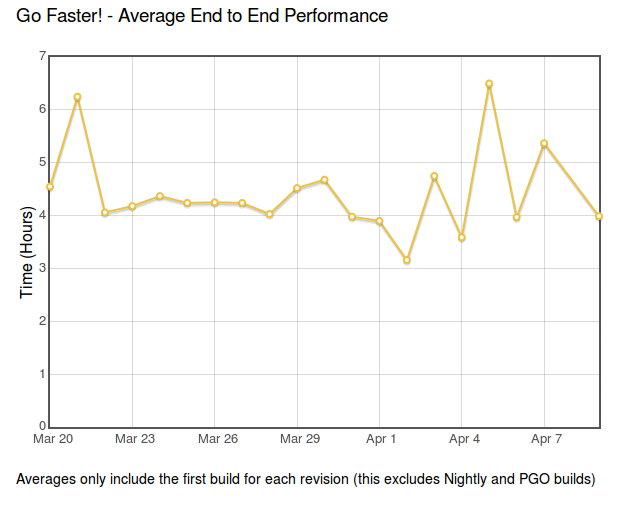

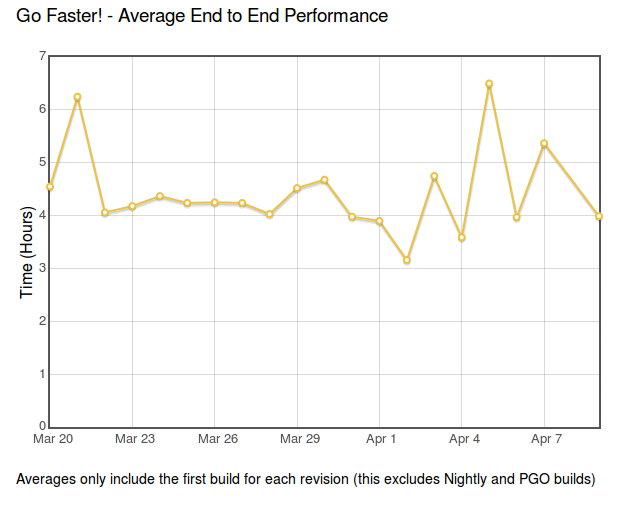

Build times for mozilla-central are a major factor in developer productivity. Faster build times mean more people using try (reducing breakage) and more fine-grained regression ranges (reducing the impact of breakages). As a side benefit, it allows us to avoid buying and maintaining more hardware (or put new hardware to better use). About a half-year ago, we set up a project called BuildFaster to try to bring these times down, setting the ambitious goal of getting build times (from checkin to tests done) down to 2 hours. We didn’t quite succeed, though we did make some major strides. As part of this project, we also developed a dashboard to track our progress and narrow down the major bottlenecks which were keeping up our build times.

Unfortunately, this dashboard went down earlier this year with the rest of Brasstacks and we hadn’t had the chance to bring it back up. I’m pleased to announce that thanks to Jonathan Griffin, it’s finally back online.

While no one is actively working on build performance at the moment (at least to my knowledge), it’s still useful to keep track of build times to make sure that we don’t regress. Anecdotally, it has seemed to me that the time needed to get results from try has been pretty stable over the last while, and this is borne out by the results:

As the cliche goes: no news is good news.

[ For more information on the Eideticker software I’m referring to, see this entry ]

Participated in an interesting meeting on checkerboarding in Firefox for Android yesterday. As a reminder, checkerboarding refers to the amount of time you spend waiting to see the full page after you do a swipe on your mobile device, and it’s a big issue right now: so much so that it puts our delivery goal for the new native browser at risk.

It seems like we have a number of strategies for improving performance which will likely solve the problem, but we need to be able to measure improvements to make sure that we’re making progress. This is one of the places where Eideticker could be useful (especially with regards to measuring us against the competition), though there are a few things that we need to add before it’s going to be as useful as it could be. The most urgent, as I understand, is to come up with a suite of tests which accurately represent the set of pages that we’re having issues with. The current main measure of checkerboarding that we’re using with eideticker is taskjs.org which, while an interesting test case in some ways, doesn’t accurately represent the sort of site that the user would normally go to in the wild (and thus be annoyed by). 😉

This is going to take a few days (and a lot of review: I’m definitely no expert when it comes to this stuff) to get right, but I just added two tests for the New York Times which I think are a step in the right direction of being more representative of real-world use cases. Have a look here:

http://wrla.ch/eideticker/dashboard/#/nytimes-scrolling

http://wrla.ch/eideticker/dashboard/#/nytimes-zooming

The results here actually aren’t as bad as I would have expected/remembered. The amount of checkerboarding after a zoom out is a bit annoying (I understand this is a known issue with font caching, or something) but not too terrible. Still, any improvements that show up here will probably apply across a wide variety of sites, as the design patterns on the New York Times site are very common.

(P.S. yes, I know I promised a comparison with Google Chrome for Android last time: rest assured that’s still coming soon!)

Just thought I’d mention this because I found it handy.

A while back AaronMT wrote up some clever instructions on taking Android screenshots by dumping the contents of ‘/dev/fb0’ and running ffmpeg on the results. This is useful, but you need to know the resolution of the device you have connected to pass the right arguments to ffmpeg. Wouldn’t it be better if you had just one script that would work for whatever device you had plugged in?

In fact, there is a way to do this using the monkeyrunner utility. Intended mainly as a tool for synthesizing input on Android (more on that some other time), you can also easily get a capture of the Android screen with its python/jython API (assuming you have the Android SDK installed). Here’s a quick script which does the job:

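# Runs under monkeyrunner (Jython, so Python 2 syntax), not a regular Python interpreter.
from com.android.monkeyrunner import MonkeyRunner, MonkeyDevice
import os
import sys

# Expect exactly one argument: the output filename.
if len(sys.argv) != 2:
    print "Usage: %s <filename>" % os.path.basename(sys.argv[0])
    sys.exit(1)

# Wait for a device to connect, grab the screen and save it as a PNG.
device = MonkeyRunner.waitForConnection()
result = device.takeSnapshot()
result.writeToFile(sys.argv[1], 'png')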

Copy that into a file called capture.py (or whatever), then run it like so:

monkeyrunner capture.py screenshot.png

And you’re off to the races! Nice screenshot, no utilities or non-essential command line arguments required!

(credit to this stackoverflow answer for the idea)

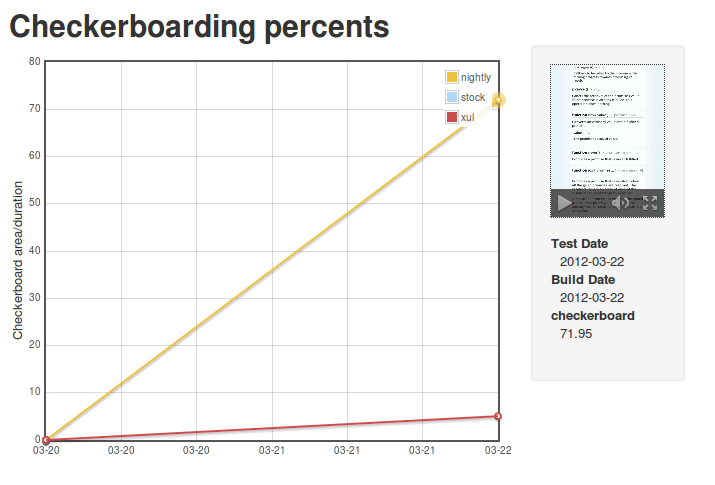

[ For more information on the Eideticker software I’m referring to, see this entry ]

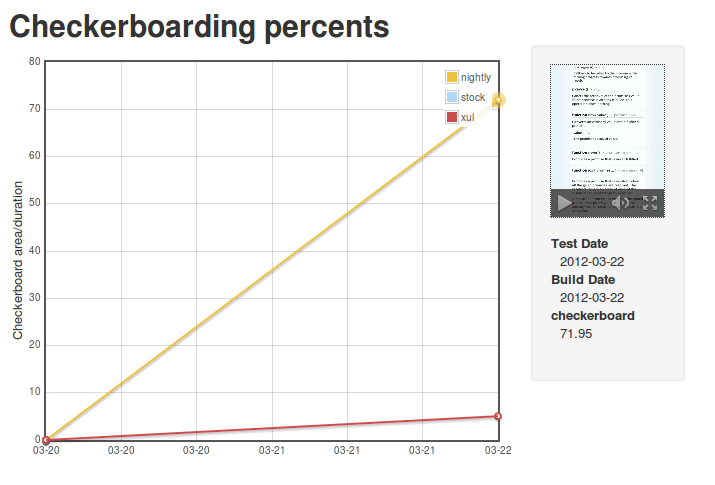

Since my first Eideticker dashboard post was so well received, I thought I’d give a quick update on another metric that I just brought online: checkerboarding (a.k.a. the amount of time you spend waiting to see the full page after you do a swipe on your mobile device).

[ link to real thing ]

Unfortunately the news here is not as good as before: as the numbers indicate, the new Native Fennec currently performs substantially worse than the version in Android market. This is a known issue, and is currently being tracked in bug 719447.

Next up: Seeing how we do against Google Chrome for Android.

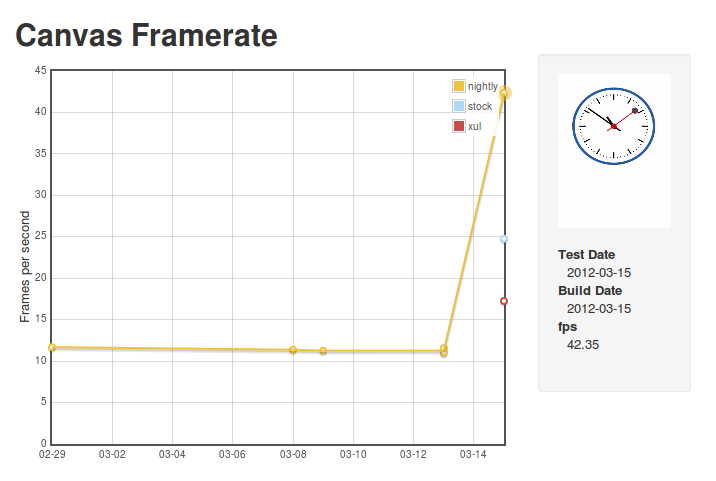

Over the last while, Clint Talbert and I have been working on setting up automatic mobile performance tests using Eideticker (a framework to measure perceived Firefox performance by video capturing automated browser interactions: for more information, see my earlier post).

There’s many reasons why this is interesting, but probably the most important one is that it can measure differences reliably across different types of mobile browsers. Currently I’m testing the old XUL fennec, the Android stock browser, and the latest nightlies.

I’m pleased to announce that the first iteration of the dashboard is available for public consumption, on my site.

http://wrla.ch/eideticker/dashboard/#/canvas

The demo is pretty cheesy (just click on any of the datapoints to see the video capture), but nonetheless does seem to illustrate some interesting differences between the three browsers. The big jump in performance for nightly comes from the landing of the Maple branch, which happened earlier this week. Hopefully this validates some of the work that the mobile/graphics team has been doing over the past while. Exciting times!