Just before I leave for some Christmas vacation, it’s time for another update on the state of Eideticker. Since I last blogged about the software, I’ve been working on the following three areas:

- Coming up with a better algorithm (green screen / red screen) for determining both the area of the capture and the start/end of the capture. The harness was already flood filling the area with these colours at the beginning/end of the capture, but now we’re actually using this information. The code’s a little hacky, but it seems to work well enough for the test cases I’ve been using so far.

- As a demonstration, I wrote up a quick test that exhibits checkerboarding on mobile Fennec, along with a quick bit of analysis code to detect this pattern and give an overall measure of how much this test “checkerboards” (i.e. has regions that are not fully painted when the user scrolls). As I understand it, our mobile team is currently working on this problem quite a bit, so it will be interesting to watch the numbers given by this test and see if things improve.

- It’s a minor thing, but you can now view a complete webm movie of the capture right from the web interface.
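
To make the checkerboarding measure a bit more concrete, here is a minimal sketch in Python. It is not Eideticker's actual analysis code: the frame representation (a list of rows of RGB tuples), the two placeholder colours, and the tolerance are all assumptions made for illustration. It is also only a rough colour match, so a page that happens to contain those colours would be miscounted.

```python
# Hypothetical placeholder colours for unpainted regions; the real
# checkerboard colours drawn by Fennec may well differ.
CHECKER_COLOURS = [(255, 255, 255), (204, 204, 204)]


def is_checker_pixel(pixel, tolerance=10):
    """True if every channel is within `tolerance` of a placeholder colour."""
    return any(
        all(abs(value - ref) <= tolerance for value, ref in zip(pixel, colour))
        for colour in CHECKER_COLOURS
    )


def checkerboard_fraction(frame, tolerance=10):
    """Fraction of the pixels in one frame that look unpainted."""
    pixels = [pixel for row in frame for pixel in row]
    if not pixels:
        return 0.0
    return sum(is_checker_pixel(p, tolerance) for p in pixels) / len(pixels)


def checkerboard_score(frames, tolerance=10):
    """Sum of per-frame unpainted fractions; 0 means nothing matched."""
    return sum(checkerboard_fraction(frame, tolerance) for frame in frames)
```

Summing the per-frame fractions gives a single number that grows both with how much of the screen is unpainted and with how long it stays that way, which is the kind of overall measure you would want to watch over time.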

Here’s a quick demonstration video that shows all the above in action. As before, you might want to watch this full screen:

Happy holidays!

The AMT released their GTFS schedule information to the public earlier this week, which is awesome. Not coincidentally, Montreal is going to have a Transportation Camp tomorrow, wherein people will hack on transportation software and discuss open data issues.

GTFS information is useful and standard, but in its raw form it can be a bit difficult to wrangle. So in advance of the event, I thought it might be helpful/useful to put a simple JSON API on top of the data, based on my routez software. Should be useful for creating an app or two! There’s two endpoints that are currently defined:

/api/v1/stop/<stop code>/upcoming_stoptimes

This will give a set of upcoming departures at a particular AMT stop (represented by its code). Example:

http://amt.wrla.ch/blog/api/v1/stop/11260/upcoming_stoptimes

/api/v1/place/<lat,lng>/upcoming_stoptimes?distance=<distance in meters>

This will give a set of AMT stops within range of that location, along with upcoming departures. Example:

http://amt.wrla.ch/blog/api/v1/place/45.49640,%20-73.57567/upcoming_stoptimes?distance=1000
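
If you want to call these endpoints from a script, something like the following Python sketch should do. The base URL and path layout are copied from the examples above; the helper names (`stop_url`, `place_url`, `fetch_json`) are made up for illustration, and since the shape of the JSON response isn't described here, the sketch just returns whatever the server sends back, parsed.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen

# Base URL taken from the example links above.
BASE_URL = "http://amt.wrla.ch/blog/api/v1"


def stop_url(stop_code):
    """URL for upcoming departures at a single stop, identified by its code."""
    return f"{BASE_URL}/stop/{quote(str(stop_code))}/upcoming_stoptimes"


def place_url(lat, lng, distance_m):
    """URL for stops (and their departures) within distance_m metres of a point."""
    # Mirrors the "lat, lng" form of the example, with the space percent-encoded.
    place = quote(f"{lat:.5f}, {lng:.5f}", safe=",")
    query = urlencode({"distance": distance_m})
    return f"{BASE_URL}/place/{place}/upcoming_stoptimes?{query}"


def fetch_json(url, timeout=10):
    """Fetch a URL and parse the body as JSON (no assumptions about its schema)."""
    with urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `fetch_json(stop_url(11260))` requests the same resource as the first example link.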

So I got some nice feedback on my Eideticker post yesterday on various channels. It seems like some people are interested in hacking on the analysis portion, so I thought I’d give some quick pointers and suggestions of things to look at.

- As I mentioned yesterday, the frame analysis is rather stupid. We need to come up with a better algorithm for disambiguating input noise (small fluctuations in the HDMI signal?) from actual changes in the page. Unfortunately the breadth of things that Eideticker’s meant to analyze makes this a bit difficult. I.e. edge detection probably wouldn’t work for something like Microsoft’s psychedelic browsing demo. I suspect the best route here is to put some work into better understanding the nature of this “noise” and finding a way to filter it out explicitly.

- Our analysis code is still rather slow, and is crying out to be parallelized (either by using multiple cores of the same CPU or a GPU). Burak Yiğit Kaya recommended I look into PyCuda which looks interesting. It looks like there are other possibilities as well though.

- Clipping capture by green screen/red screen. This should be doable by writing some relatively simple code to detect large amounts of green and red and then ignoring previous/current/subsequent frames as appropriate.

- Moar test cases! It was initially suggested to use some of the classic benchmarks, but these only seem to barely work on Fennec (at least with the setup I have). I don’t know if this is fixable or not, but until it is, we might be better off coming up with more reasonable/realistic measures of visual performance.
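
For anyone who wants to take a crack at the green screen / red screen clipping item, here is one way it could be sketched in Python. This is only an illustration, not code from the Eideticker repository: frames are assumed to be lists of rows of RGB tuples, and the thresholds (`min_value`, `max_other`, `coverage`) are guesses that would need tuning against real captures.

```python
def colour_fraction(frame, channel, min_value=200, max_other=60):
    """Fraction of pixels dominated by one channel (0 = red, 1 = green, 2 = blue)."""
    pixels = [pixel for row in frame for pixel in row]
    if not pixels:
        return 0.0

    def dominated(pixel):
        return pixel[channel] >= min_value and all(
            value <= max_other for i, value in enumerate(pixel) if i != channel
        )

    return sum(dominated(p) for p in pixels) / len(pixels)


def clip_capture(frames, coverage=0.9):
    """Return (start, end) such that frames[start:end] is the actual test.

    start is the first frame after the leading run of green frames (0 if no
    green frame is found); end is the first red frame after that (or the end
    of the capture if no red frame is found).
    """
    n = len(frames)
    i = 0
    # Skip anything captured before the green screen appears.
    while i < n and colour_fraction(frames[i], 1) < coverage:
        i += 1
    if i == n:
        start = 0
    else:
        # Skip the green screen itself.
        while i < n and colour_fraction(frames[i], 1) >= coverage:
            i += 1
        start = i
    end = n
    for j in range(start, n):
        if colour_fraction(frames[j], 0) >= coverage:
            end = j
            break
    return start, end
```

Using a coverage fraction rather than requiring every pixel to match leaves some room for capture noise and for parts of the screen (a status bar, say) that the flood fill doesn't reach.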

You might be able to find other inspiration on the Eideticker project page (note that some of this is out of date).

You obviously need the decklink card to perform captures, but the analysis portion of Eideticker can be used/modified on any machine running Linux (Mac should also work, but is untested). To get up and running, just follow the instructions in README.md, dump a pregenerated capture into the captures/ directory (here’s one of a clock), and off you go! The actual analysis code (such as it is) is currently located in src/videocapture/videocapture/capture.py while the web interface is in https://github.com/mozilla/eideticker/blob/master/src/webapp.

I’m going to be out later today (Friday), but I’m mostly around on IRC M-F 9ish–5ish EST on irc.mozilla.org #ateam as `wlach`. Feel free to pester me with questions!

P.S. I didn’t really cover infrastructure/automation portions above as I suspect people will find that less interesting (especially without a video capture card to test with), but you can look at my newsgroup post from yesterday if you want to see what I’ll likely be up to over the next few weeks.

Since I last blogged about Eideticker, I’ve made some good progress. Here’s some highlights:

- Eideticker has a new, much simpler harness and tests are much easier to write. Initially, I was using Talos for this task with the idea that it’s better not to have duplicate code where it’s not really required. Seemed like a fine idea in principle, but in practice Talos’s architecture (which is really oriented around running a large sequence of tests and uploading the results to a central server) was difficult to extend to do what we need to do. At heart, eideticker really only needs to do a few things right now (start up Firefox, start videocapture, load a webpage, stop videocapture) so it’s best to keep things simple.

- I’ve reworked the capture analysis API to use numpy behind the scenes. It’s still not quite as fast as I would like (doing a framediff analysis on a 30 second animation still takes a minute or so on my fast machine), but we’re doing an order of magnitude better than before. numpy also seems to have quite the library of routines for doing the types of matrix algebra useful in image analysis, which should be helpful as the project progresses.

- I added the beginnings of a fancy pants web interface for browsing captures and doing visualizations on them! I’m pretty happy with how this is turning out so far, it’s already been an incredibly useful tool for debugging Eideticker’s analysis system and I think it will be equally useful for understanding Firefox’s behaviour in general.

Here’s an example analysis session, where I examine a ~60 second capture of the fishtank demo from Microsoft, borrowed from Mark Cote’s speedtest library. You might want to view this fullscreen:

A few interesting things to note about this capture:

- Our frame comparison algorithm is still comparatively dumb: it just computes the norm of the difference in RGB values between two frames. Since there’s a (very tiny) amount of noise in the capture, we have to use a threshold to determine whether two frames are the same or not. For all that, the FPS estimate it comes up with for the fishtank demo seems about right (and unfortunately at 2 fps, it’s not particularly good).

- I added a green screen / red screen at the start / end of every capture to eliminate race conditions with starting the capture, but haven’t yet actually taken those frames out of the analysis.

- If you look carefully at the animation, not all of the fish that should be displaying in the demo are. I think this has to do with the new native version of Fennec that I’m using to test (old versions don’t exhibit this property). I filed a bug for this.
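
The frame comparison described in the first point above boils down to something like the sketch below. To be clear, this is a simplified illustration and not the code in Eideticker itself: it uses plain Python lists of RGB tuples instead of numpy arrays purely to stay dependency-free, and the function names and the threshold you pass in are assumptions.

```python
import math


def frame_difference_norm(frame_a, frame_b):
    """Euclidean norm of the per-channel RGB difference between two frames."""
    total = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += sum((a - b) ** 2 for a, b in zip(pixel_a, pixel_b))
    return math.sqrt(total)


def frames_differ(frame_a, frame_b, threshold):
    """Treat two frames as different only if the norm exceeds the noise threshold."""
    return frame_difference_norm(frame_a, frame_b) > threshold
```

The threshold is the awkward part: set it too low and capture noise registers as a new frame, set it too high and small but real changes on the page get swallowed.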

What’s next? Well, as I mentioned last time, the real goal is to create a tool that developers will find useful. To that end, we have plans to set up an Eideticker machine in Mozilla’s Mountain View office that more people can use (either locally or remotely over the VPN). For this to be workable, I need to figure out how to get the full setup working “on demand”. Most of the setup already allows this, with one big exception: the actual Android device that we want to capture video from. The LG G2X that I’m currently using works fine when I have physical access to it, but as far as I can tell it’s not possible to get it outputting proper video of an application unless it’s in an unlocked state (which it obviously isn’t most of the time).

My current thinking is that a Panda Board running a Vanilla version of Android might be a good candidate for a permanently-connected device. It is capable of HDMI output, doesn’t have the unwanted bells and whistles of a physical phone (e.g. a lock screen), and should be much more reliable due to its physical networking. So far I haven’t had much luck getting the video output working with the Decklink capture card, but I’ve only just started trying. Work will continue.

If I can somehow figure that out, and smooth out some of the rough edges with the web interface and capture API, I think the stage will be set for us all to do some pretty interesting stuff! Looking forward to it.

Just a quick note that a planet for Mozilla Tools & Automation (the so-called “a team”) is now up, thanks to Reed Loden. With the exception of Jeff Hammel, everyone there was already being syndicated on Planet Mozilla, but this should offer a more focused feed of our doings for those who can’t always keep up with the firehose. Have a look:

http://planet.mozilla.org/ateam

Who should care? Well, we maintain all the major testing frameworks like Mochitest, Reftest, and Talos as well as automated tooling for QA like Mozmill. Our latest work is focused on making sure that Firefox is as robust, responsive, and performant as possible on desktop and mobile. In short, if you’re writing or verifying code from mozilla-central, what we’re doing probably affects you. Please let us know what you think about our projects and whether there’s anything we can do to make your job easier: we’re listening.

Quick bonus note: It’s not immediately obvious (or at least it wasn’t to me), but Mozilla has some fairly finely tuned infrastructure for running planets. If your team or group wants one, it’s definitely better to plug into that instead of rolling your own. 😉 Reed Loden is the maintainer and the source lives in subversion.

It’s kind of rare for me to pimp out products and services on my blog, but I’m going to do so just this once.

I really despise faxes, but occasionally I do have to send them in the course of some of the admin work I still do on the side for my old consulting company (address changes, etc.). If you’re in a similar boat, you really owe it to yourself to check out hellofax.com. It’s about three gazillion times better than any other similar service that I’ve tried (which were all embarrassingly bad: I’d sooner just go to a copy shop) and has saved me hours and hours of time and frustration.

I’ve been spending the last month or so at Mozilla prototyping a new project called Eideticker which aims to use video capture data and image/frame analysis for performance measurement of Firefox Mobile. It’s still in quite a rough state, but it’s now complete enough that I thought it would be worth spending a bit of time describing both its motivation and how it works.

First, a bit of an introduction. Up to now, our automated performance tools have used entirely synthetic benchmarks (how long til we get the onload event? how many ms since we last hit the main loop?) to gather performance information. As we’ve found out, there’s a lot you can measure with synthetic benchmarks. Tools like Talos have proven themselves by catching performance regressions on a very regular basis.

Still, there’s many things that synthetic benchmarks can’t easily or reliably measure. For example, it’s nice to know that a page has triggered an “onload” event (and the sooner it does that, the better), but what does the browser look like before then? If it’s a complicated or image intensive page, it might take 10 or 15 seconds to load. In this interval, user studies have clearly shown that an application displaying something sooner rather than later is always desirable if it’s not possible to display everything immediately (due to network traffic, CPU constraints, whatever). It’s this area of user-perceived performance that Eideticker aims to help with. Eideticker creates a system to capture live data of what the browser is displaying, then performs image/frame analysis on the result to see how we’re actually doing on these inherently subjective metrics. The above was just one example, others might include:

- Measuring amount of time it takes to actually see the start page from time of launch.

- Measuring amount of time you see the checkerboard pattern after panning the browser.

- Measuring the visual artifacts while loading a complicated page (how long does it take to display something? how long until we get something close to the final expected result? how long until we get the actual final result?)
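
As a rough illustration of the last measurement in the list above, here is a Python sketch that pulls three milestones out of a sequence of captured frames: when something first changes on screen, when the display first gets close to the final result, and when it settles on the final result. Everything here is hypothetical (the function names, the frames-as-lists-of-RGB-tuples representation, the constant frame rate, the 90% cutoff), and it uses exact pixel equality, which a real capture with noise would not allow.

```python
def similarity(frame, reference):
    """Fraction of pixels in `frame` identical to the same pixel in `reference`."""
    pairs = [
        (pixel, ref_pixel)
        for row, ref_row in zip(frame, reference)
        for pixel, ref_pixel in zip(row, ref_row)
    ]
    if not pairs:
        return 1.0
    return sum(pixel == ref_pixel for pixel, ref_pixel in pairs) / len(pairs)


def load_milestones(frames, fps, close_enough=0.9):
    """Times (in seconds) of three visual milestones in a non-empty capture.

    Assumes the first frame is the "before" state and the last frame is the
    final expected result.
    """
    first, final = frames[0], frames[-1]

    # First frame that differs at all from the starting state.
    first_change = next((i for i, f in enumerate(frames) if f != first), None)

    # First frame that is at least `close_enough` similar to the final frame.
    near_final = next(
        i for i, f in enumerate(frames) if similarity(f, final) >= close_enough
    )

    # Start of the trailing run of frames identical to the final frame.
    settled = len(frames) - 1
    while settled > 0 and frames[settled - 1] == final:
        settled -= 1

    def to_seconds(index):
        return None if index is None else index / fps

    return {
        "first_change": to_seconds(first_change),
        "near_final": to_seconds(near_final),
        "final": to_seconds(settled),
    }
```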

It turns out that it’s possible to put together a system that does this type of analysis using off-the-shelf components. We’re still very much in the early phase, but initial signs are promising. The initial test system has the following pieces:

- A Linux workstation equipped with a Decklink extreme 3D video capture card

- An Android phone with HDMI output (currently using the LG G2X)

- A version of talos modified to video capture the results of a test.

- A bit of python code to actually analyze the video capture data.

So far, I’ve got the system working end-to-end for two simple cases. The first is the “pageload” case. This lets you capture the results of loading any page within a talos pageset. Here’s a quick example of the movie we generate from a tsvg test:

Here’s another example, a color cycle test (actually the first test case I created, as a throwaway):

After the video is captured, the next step is to analyze it! As described above (and in further detail on the Eideticker wiki page), there’s lots of things we could measure but the easiest thing is probably just to count the number of unique frames and derive a frame rate for the capture based on that (the higher the better, obviously). Based on an initial prototype from Chris Jones, I’ve started work on a python library to do exactly this. Assuming you have an eideticker capture handy, you can run a tool called “analyze.py” on the command line, and it’ll give you its best guess of the # of unique frames:

    (eideticker)wlach@eideticker:~/src/eideticker$ bin/analyze.py ./src/talos/talos/captures/capture-2011-11-11T11:23:51.627183.zip
    Unique frames: 121/272

(There are currently some rough edges with this: we’re doing frame comparisons based on per-pixel changes, but the video capture data is slightly noisy so sometimes a pixel changes its value even when nothing has actually happened in the browser)
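
To give a feel for what counting unique frames involves, and for one possible way of coping with the noise, here is a small Python sketch. It is not what `analyze.py` actually does: the `noise` and `min_changed_pixels` parameters, the frame representation (lists of rows of RGB tuples), and the choice to compare each frame against the last one counted as unique are all assumptions of mine.

```python
def pixels_changed(frame_a, frame_b, noise=0):
    """Number of pixels where any channel differs by more than `noise`."""
    return sum(
        any(abs(a - b) > noise for a, b in zip(pixel_a, pixel_b))
        for row_a, row_b in zip(frame_a, frame_b)
        for pixel_a, pixel_b in zip(row_a, row_b)
    )


def count_unique_frames(frames, noise=0, min_changed_pixels=1):
    """Count frames that differ visibly from the last frame counted as unique."""
    if not frames:
        return 0
    unique = 1
    last = frames[0]
    for frame in frames[1:]:
        if pixels_changed(last, frame, noise) >= min_changed_pixels:
            unique += 1
            last = frame
    return unique


def estimated_fps(frames, capture_fps, noise=0, min_changed_pixels=1):
    """Unique frames divided by the capture's duration in seconds."""
    if not frames:
        return 0.0
    duration = len(frames) / capture_fps
    return count_unique_frames(frames, noise, min_changed_pixels) / duration
```

With `noise=0` this is a strict per-pixel comparison, which is where the rough edges mentioned above come from; raising `noise` or `min_changed_pixels` trades sensitivity for robustness.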

So that’s what I’ve got working so far. What’s next? Short term, we have some specific high-level goals about where we want to be with the system by the end of the quarter. The big unfinished pieces are getting an end-to-end test involving real user interaction (typing into the URL bar, etc.) going and turning this prototype system into something that’s easy for others to duplicate and is robust enough to be easily extended. Hopefully this will come together fairly quickly now that the basics are in place.

The longer term picture really depends on feedback from the community. Unlike many of the projects we work on in automation & tools, Eideticker is not meant to be something that’s run on every checkin. Rather, it’s intended to be a useful tool that can be run on an as needed basis by developers and QA. We obviously have our own ideas on how something like this might be useful (and what a reasonable user interface might be), but I’ve found in cases like this it’s much better to go to the people who will actually be using this thing. So with that in mind, here’s a call for feedback. I have two very specific questions:

- Is there a specific problem you’ve been working on that a framework like this might be helpful for?

- What do you think of the current workflow model described in the README?

My goal is to make something that people will love, so please do let me know what you think. Nothing about this project is cast in stone and the last thing I want is to deliver a product that people don’t actually want to use.

Equally, while Eideticker is being written primarily with the goal of making Mobile Firefox better (and in the slightly-less short term, desktop Firefox and Boot to Gecko), much of it is broadly applicable to any user-facing mobile or desktop application. If you think some component of Eideticker might be interesting to your project and want to collaborate, feel free to get in touch.

Got this exceedingly strange e-mail yesterday. It’s almost a bit tragic. Anyone else gotten this?

(BTW, the writing samples, which I won’t link to, weren’t half bad)

Hi there,

Hope keeping well. I’m just getting in touch to ask if you’re open to reviewing content from freelance writers at Ginger Tea and Channa Masala : if so, I’d love to put together a high-quality article written specifically for the site. I’m 29 and have been working as a professional writer and researcher for five years now, and in that time there isn’t a lot I haven’t already covered (I’ve attached a few samples below for you to check out).

As long as you’re happy with the resulting material, you’d be welcome to publish it as you see fit and the content will be owned by you entirely (in that I won’t send it to anyone else, either before or after publication).

There is absolutely no charge for this and no strings attached; the only thing I would ask in return is that I’m able to include a link to a site of my choosing within the article : nothing shady or unethical, just one of the professional businesses I freelance for.

Do let me know if you’re interested, and if so I can get something written for you over the course of the next few days. Needless to say, the offer is open to any other sites you might own as well as wrla.ch/blog. I appreciate you may not be interested in this kind of mutual back-scratching however, so if I don’t hear from you, no offence taken and I won’t trouble you again.

Very best,

XXXXXX

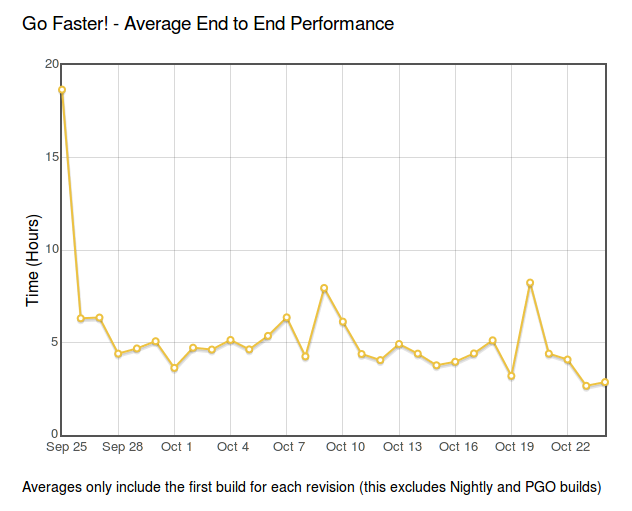

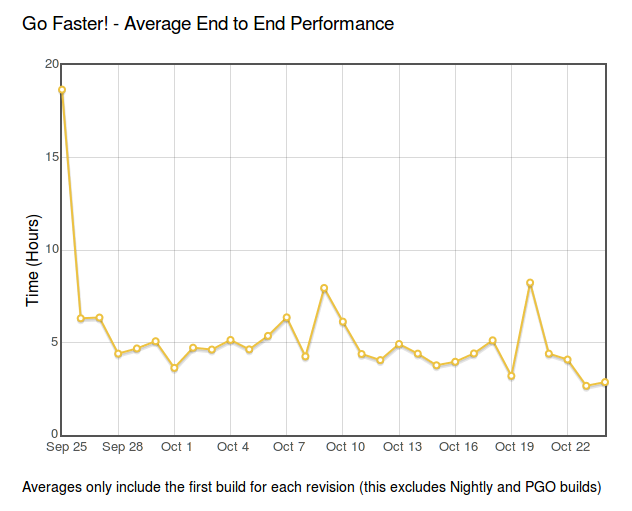

So as others have been posting about, we’ve been making some headway on the GoFaster project. Unfortunately it seems like we’re still some distance away from reaching our magic number of a 2 hour turnaround for each revision pushed.

It’s a bit hard to see the exact number on the graph (someone should fix that), but we seem to be teetering around an average of 3 hours at this point. Looking at our build charts, it seems like the critical path has shifted in many cases from Windows to MacOS X. Is there something we can do to close the gap there? Or is there a more general fix which would lead to substantial savings? If you have any thoughts, or would like to help out, we’re scheduled to have a short meeting tomorrow.

Anyone is welcome to join, but note that we’re practical, results-oriented people. Crazy ideas are fun, but we’re most interested in proposals that have measurable data behind them and can be implemented in reasonable amounts of time.

Despite making a dramatic shift from front-end development to back-end stuff since I started at Mozilla a few months ago, I’ve still had occasion to write a fair bit of user-facing code, even if my audience (other developers) is a bit more limited than what I’ve been used to. Since my mission is to make the rest of Mozilla more productive, it’s worth putting a bit of time and attention into the user interface for my stuff. If I can reduce learning curves or streamline day-to-day workflows, that’s a win for everyone since they can spend that much more time rocking at their jobs (whether that be release engineering, platform work, or whatever). This brings up a point that I’ve had in the back of my mind for a while:

Despite conventional wisdom, developers can design half-decent user interfaces (if they try)!

I used to be certain that a project really needed graphic designers and/or usability experts to provide guidance on UI issues, but my experience over the last few years with iOS/web development has made me reconsider. Sure, pixel pushing and vector art is never going to be a programmer’s strong suit (and there’s certain high-level techniques that take years of study to acquire/understand), but the basic principles behind good UI design are accessible to anyone. There’s really only three core skills:

- An ability to put yourself in the shoes of the user. Who are you designing for, and what are they trying to accomplish? How can I streamline my UI to help them quickly solve the task at hand? This is one of the reasons why I find user stories so helpful.

- An understanding of common vocabulary for describing/designing applications and knowing what is “good”. Unfortunately I haven’t found anything like this for the web, but Apple’s human interface guidelines have some good general advice on this (just ignore the stuff specific to phones/tablet apps if that’s not what you’re doing).

- A willingness to iterate. The best ideas usually aren’t apparent immediately, and may only come out of a back and forth. It’s been my experience that the more constructive dialog there is between people actively involved in the project on user experience issues, the better the end result is likely to be.

For example, one of the things that release engineering has found most useful in the GoFaster Dashboard has been the build charts. Believe it or not, the idea for that view started out as this useless piece of junk (I can say that because I created it). It was only after a good half hour back and forth on irc between myself, jgriffin, and jmaher (all of us backend/tool developers) that we came up with the view that inspired so much good analysis on the project.

All this is not to say that usability experts and graphic designers don’t have special skills that are worthy of respect. Indeed, if you’re a designer and would like to get involved with our work, please join us, we’d love your help. My only point is that on a project where a design resource isn’t available, thinking explicitly about usability is still worthwhile. And even where you have a UX expert on staff, programmers can have useful feedback too. Good UI is everyone’s responsibility!